Technology

As businesses look for ways to give employees flexible work environments, whether on desktops or mobile devices, in the office or out in the field, IT shops have had to scramble to consolidate the management of hardware platforms using a single console.

With that IT goal in mind, Microsoft in 2011 launched its Intune cloud service to address the emerging enterprise mobility management (EMM) needs of the workplace.

- Details

- Category: Technology

Read more: What is Microsoft’s Intune – and how well does the UEM tool really work

IT contractor pilot fish's project at a big company is winding down, so the company cuts him loose -- but also offers him a job as a developer doing the same thing he was doing before.

"With no other real options except unemployment, I decided to accept the position," says fish.

"Upon arriving for my first day of work as a regular employee, basically working on the same projects I was working on as a contractor the previous Friday, I figured there would not be any issues with my new job."

It doesn't take long to get his computer set up, and fish soon starts back in on his project, which means building stored procedures for database transactions in exactly the same way he's been doing for months.


Read more: Why we (don't always) love the squeaky wheel

If you don't think the healthcare market is prepping for the radical transformations that remote care, persistent diagnostics and monitoring, and improved targeted treatments are going to bring to the industry, think again.

Healthcare companies are steeling themselves for the shift in healthcare services in the most desperate way they can — by launching venture funds. The latest to make the move is Cigna, the multi-billion-dollar healthcare insurer which is now launching a $250 million venture fund called Cigna Ventures.

Starting a venture fund has often been the last, worst best hope of corporations that have been overtaken by dramatic changes in technological platforms. At the tail end of the last internet bubble, as everything was about to fall apart, big companies began to realize that technology was bringing hordes of new barbarians to the gate. And they swung into action to finance these companies, and get a window into them, even as the hordes were immolating themselves on pyres of wasted cash and incomprehensible business models.

This time, corporations in industries like health insurance may not have the luxury of startup ignorance to protect them from the slow march of progress.

The mighty combination of Amazon, Berkshire Hathaway and JP Morgan Chase looms large in the visions (or nightmares) of healthcare services providers, and the potential for a single-payor healthcare system in the U.S. can't be far behind. And while one (ahem… single payor) is almost surely the stuff of nightmares, the announcement of a new chief operating officer is making the venture increasingly real.

Cigna says it will focus on investing in companies that will bring improved care quality, affordability, choice and greater simplicity to customers and clients in three strategic areas: insights and analytics; digital health and retail; and care delivery and management.

Companies in the portfolio include Omada Health, a digital therapeutics company treating chronic diseases; Prognos, a predictive analytics company for healthcare; Contessa Health, a home-patient care service; Mdlive, which provides remote health consultations; and Cricket Health, a special kidney care provider.

"Cigna's commitment to improving the health, well-being and sense of security of the people we serve is at the front and center of everything we do," said Tom Richards, senior vice president and global lead, strategy and business development at Cigna, in a statement. "The venture fund will enable us to drive innovation beyond our existing core business operations, and incubate new ideas, opportunities and relationships that have the potential for long-term business growth and to help our customers."

Cigna has been spending like a drunken sailor to acquire businesses in an effort to build out an organization to withstand the assault of Amazonians, the government and upstart startups alike. Its stock fell off a cliff in March after it announced the $67 billion acquisition of Express Scripts, months before Amazon acquired PillPack in a roughly $1 billion transaction.

Cigna had invested in startups before the creation of this new venture fund. According to Crunchbase, the company's investment activity in startupland dates back to 2016.

"Our partnership with Cigna has been about so much more than capital," said Sean Duffy, co-founder and CEO of Omada. "The ability to collaborate with, learn from, and integrate deeply with a health services company so dedicated to delivering a 21st-century care experience to its customers and clients has enabled us to accelerate innovation, advance our capabilities, and grow our customer base."


Read more: As market pressures loom, healthcare giant Cigna launches a $250 million venture fund

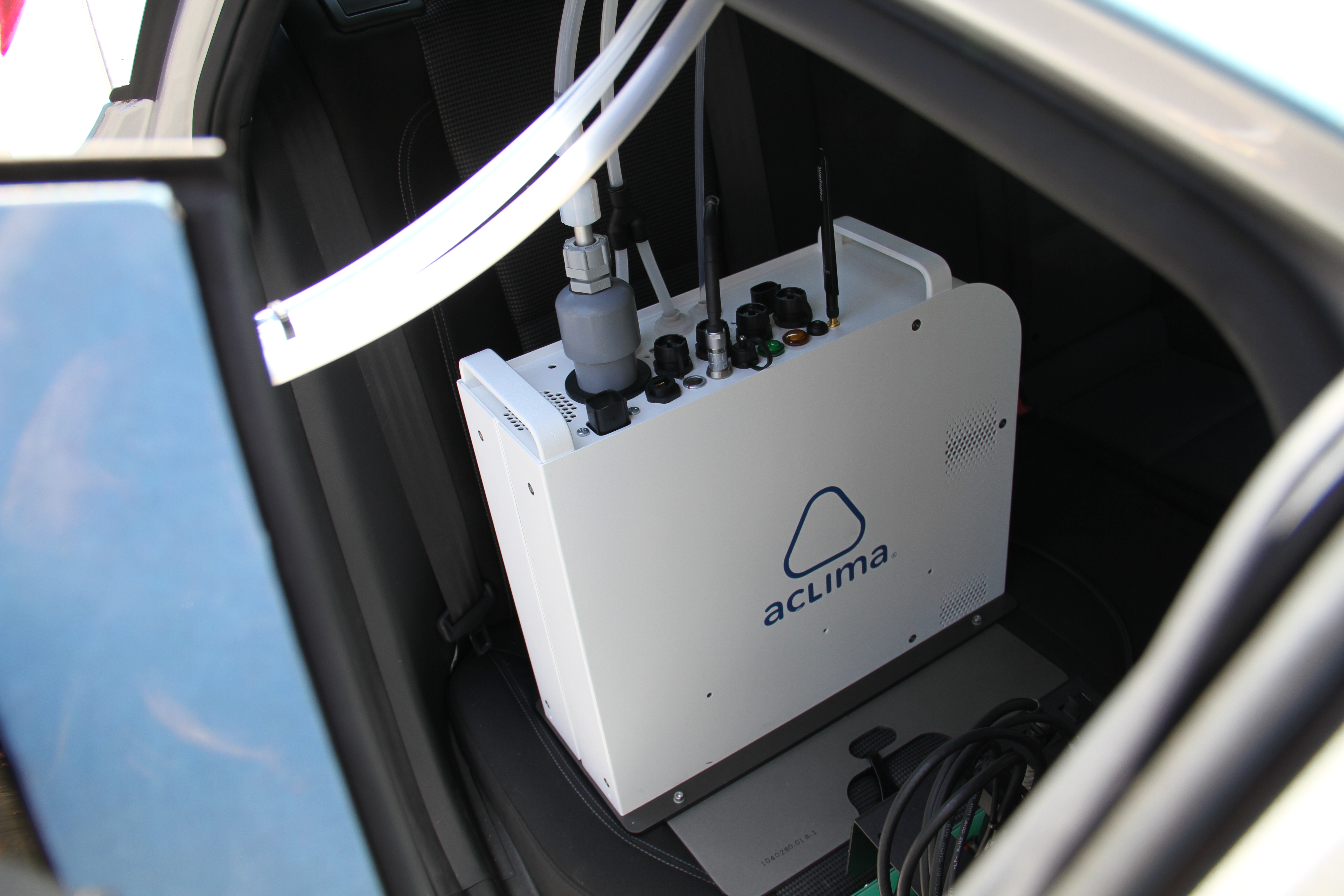

Aclima, a San Francisco-based startup building Internet-connected air quality sensors, has announced plans to integrate its mobile sensing platform into Google's global fleet of Street View vehicles.

Google uses the Street View cars to map the land for Google Maps. Starting with 50 cars in Houston, Mexico City and Sydney, Aclima will capture air quality data by generating snapshots of carbon dioxide (CO2), carbon monoxide (CO), nitric oxide (NO), nitrogen dioxide (NO2), ozone (O3), and particulate matter (PM2.5) while the Google cars roam the streets. The idea is to ascertain where there may be too much pollution and other breathing issues on a hyper local level in each metropolitan area. The data will then be made available as a public dataset on Google BigQuery.

Aclima has had a close relationship with Google for the past few years and this is not its first ride in Street View cars. The startup deployed its sensors in London earlier this year using Google's vehicles and three years ago started working with the tech giant to ascertain air health within Google's own campus as well as around the Bay Area.

"All that work culminated in a major scientific study," Aclima founder Davida Herzl told TechCrunch, referring to a study published in Environmental Science and Technology revealing that air pollution levels varied by five to eight times along a city street. "We found you can have the best air quality and the worst air quality all on the same street… Understanding that can help with everything from urban planning to understanding your personal exposure."

That initial research now enables Aclima to scale up with Google's Street View cars in the hopes of gathering even more data on a global basis. Google Street View cars cover the roads in all seven continents and have driven over 100,000 miles in just the state of California, collecting over one billion data points since the initial project began with Aclima in 2015.

The first Street View cars with the updated Aclima sensors will hit the road this Fall in the Western United States, as well as in Europe, according to the company.

"These measurements can provide cities with new neighborhood-level insights to help cities accelerate efforts in their transition to smarter, healthier cities," Karin Tuxen-Bettman, Program Manager for Google Earth Outreach, said in a statement.


Helping businesses bring more firepower to the fight against AI-fuelled disruptors is the name of the game for Integrate.ai, a Canadian startup that's announcing a $30M Series A today.

The round is led by Portag3 Ventures. Other VCs include Georgian Partners and Real Ventures, with other (unnamed) individual investors also participating. The funding will be used for a big push in the U.S. market.

Integrate.ai's early focus has been on retail banking, retail and telcos, says founder Steve Irvine, along with some startups which have data but aren't necessarily awash with AI expertise to throw at it. (Not least because tech giants continue to hoover up talent.)

Its SaaS platform targets consumer-centric businesses, offering to plug paying customers into a range of AI technologies and techniques to optimize their decision-making so they can respond more savvily to their customers. Aka turning "high volume consumer funnels" into "flywheels", if that's a mental image that works for you.

In short it's selling AI pattern spotting insights as a service via a "cloud-based AI intelligence platform", helping businesses move from "largely rules-based decisioning" to "more machine learning-based decisioning boosted by this trusted signals exchange of data", as he puts it.

Irvine gives the example of a large insurance aggregator the startup is working with to optimize the distribution of gift cards and incentive discounts to potential customers — with the aim of maximizing conversions.

"Obviously they've got a finite amount of budget for those; they need to find a way to be able to best deploy those… And the challenge that they have is they don't have a lot of information on people as they start through this funnel, and so they have what is a classic 'cold start' problem in machine learning. And they have a tough time allocating those resources most effectively."

"One of the things that we've been able to help them with is to, essentially, find the likelihood of those people to be able to convert earlier by being able to bring in some interesting new signal for them," he continues. "Which allows them to not focus a lot of their revenue or a lot of those incentives on people who either have a low likelihood of conversion or are most likely to convert. And they can direct all of those resources at the people in the middle of the distribution, where that type of a nudge, that discount, might be the difference between them converting or not."
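The allocation idea Irvine describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the conversion scores, the 0.3 and 0.7 cut-offs, and the function name `pick_incentive_targets` are hypothetical and are not anything Integrate.ai has disclosed.

```python
from typing import Dict, List


def pick_incentive_targets(
    scores: Dict[str, float],
    budget: int,
    low: float = 0.3,   # assumed cut-off: below this, conversion is unlikely
    high: float = 0.7,  # assumed cut-off: above this, conversion is likely anyway
) -> List[str]:
    """Return up to `budget` customer ids whose predicted conversion
    probability falls in the middle band [low, high]."""
    middle = [cid for cid, p in scores.items() if low <= p <= high]
    # Prefer customers closest to 0.5, where a nudge is assumed to matter most.
    middle.sort(key=lambda cid: (abs(scores[cid] - 0.5), cid))
    return middle[:budget]


# Hypothetical predicted conversion probabilities per customer id.
scores = {"a": 0.05, "b": 0.45, "c": 0.92, "d": 0.60, "e": 0.35}
targets = pick_incentive_targets(scores, budget=2)
print(targets)
```

With these made-up scores, customers "a" (very unlikely to convert) and "c" (likely to convert anyway) are skipped, and the limited budget goes to the undecided middle.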

He says feedback from early customers suggests the approach has boosted profitability by around 30% on average for targeted business areas, so the pitch is that businesses are seeing the SaaS easily pay for itself. (In the cited case of the insurer, he says they saw a 23% boost in performance, against what he couches as already "a pretty optimized funnel".)

"We find pretty consistent [results] across a lot of the companies that we're working with," he adds. "Most of these decisions today are made by a CRM system or some other more deterministic software system that tends to over attribute people that are already going to convert. So if you can do a better job of understanding people's behaviour earlier you can do a better job at directing those resources in a way that's going to drive up conversion."

The former Facebook marketing exec, who between 2014 and 2017 ran a couple of global marketing partner programs at Facebook and Instagram, left the social network at the start of last year to found the business — raising $9.6M in seed funding in two tranches, according to Crunchbase.

The eighteen-month-old Toronto based AI startup now touts itself as one of the fastest growing companies in Canadian history, with a headcount of around 40 at this point, and a plan to grow staff 3x to 4x over the next 12 months. Irvine is also targeting growing revenue 10x, with the new funding in place — gunning to carve out a leadership position in the North American market.

One key aspect of Integrate.ai's platform approach means its customers aren't only being helped to extract more and better intel from their own data holdings, via processes such as structuring the data for AI processing (though Irvine says it's also doing that).

The idea is they also benefit from the wider network, deriving relevant insights across Integrate.ai's pooled base of customers, in a way that does not trample over privacy in the process. At least, that's the claim.

(It's worth noting Integrate.ai's network is not a huge one yet, with customers numbering in the "tens" at this point; the platform only launched in alpha around 12 months ago and remains in beta now. Named customers include the likes of Telus, Scotiabank, and Corus.)

So the idea is to offer an alternative route to boost business intelligence vs the "traditional" route of data-sharing by simply expanding databases, because, as Irvine points out, literal data pooling is "coming under fire right now, because it is not in the best interests, necessarily, of consumers; there's some big privacy concerns; there's a lot of security risk which we're seeing show up".

What exactly is Integrate.ai doing with the data then? Irvine says its Trusted Signals Exchange platform uses some "pretty advanced techniques in deep learning and other areas of machine learning to be able to transfer signals or insights that we can gain from different companies such that all the companies on our platform can benefit by delivering more personalized, relevant experiences".

"But we don't need to ever, kind of, connect data in a more traditional way," he also claims. "Or pull personally identifiable information to be able to enable it. So it becomes very privacy-safe and secure for consumers which we think is really important."

He further couches the approach as "pretty unique", adding it "wouldn't even have been possible probably a couple of years ago".

From Irvine's description the approach sounds similar to the data linking (via mathematical modelling) route being pursued by another startup, UK-based InfoSum, which has built a platform that extracts insights from linked customer databases while holding the actual data in separate silos. (And InfoSum, which was founded in 2016, also has a founder with a "behind-the-scenes" view on the inner workings of the social web, in the form of Datasift's Nic Halstead.)

Facebook's own custom audiences product, which lets advertisers upload and link their customer databases with the social network's data holdings for marketing purposes, is the likely inspiration behind all these scenes.

Irvine says he spotted the opportunity to build this line of business having been privy to a market overview in his role at Facebook, meeting with scores of companies in his marketing partner role and getting to hear high level concerns about competing with tech giants. He says the Facebook job also afforded him an overview on startup innovation — and there he spied a gap for Integrate.ai to plug in.

"My team was in 22 offices around the world, and all the major tech hubs, and so we got a chance to see any of the interesting startups that were getting traction pretty quickly," he tells TechCrunch. "That allowed us to see the gaps that existed in the market. And the biggest gap that I saw… was these big consumer enterprises needed a way to use the power of AI and needed access to third party data signals or insights to be able to enable them to transition to this more customer-centric operating model to have any hope of competing with the large digital disruptors like Amazon.

"That was kind of the push to get me out of Facebook, back from California to Toronto, Canada, to start this company."

Again on the privacy front, Irvine is a bit coy about going into exact details about the approach. But he is unequivocal and emphatic about how ad tech players are stepping over the line, having seen into that Pandora's box for years, so his rationale for wanting to do things differently at least looks clear.

"A lot of the techniques that we're using are in the field of deep learning and transfer learning," he says. "If you think about the ultimate consumer of this data-sharing, that is insight sharing, it is at the end these AI systems or models. Meaning that it doesn't need to be legible to people as an output; all we're really trying to do is increase the map; make a better probabilistic decision in these circumstances where we might have little data or not the right data that we need to be able to make the right decision. So we're applying some of the newer techniques in those areas to be able to essentially kind of abstract away from some of the more sensitive areas, create representations of people and patterns that we see between businesses and individuals, and then use that as a way to deliver more personalized predictions, without ever having to know the individual's personally identifiable information."

"We do do some work with differential privacy," he adds when pressed further on the specific techniques being used. "There's some other areas that are just a little bit more sensitive in terms of the work that we're doing, but a lot of work around representative learning and transfer learning."
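For readers unfamiliar with the term, differential privacy is usually introduced through the textbook Laplace mechanism, sketched below in Python. This is a generic illustration, not a description of Integrate.ai's system, which Irvine does not detail; the function names, the example count of 1,000 and the epsilon value of 0.5 are all assumptions made for the sketch.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    A counting query changes by at most 1 when one person is added or
    removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the sketch repeatable
noisy = private_count(1000, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller epsilon adds more noise and gives stronger privacy; the released figure stays useful in aggregate while masking whether any single individual is in the data.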

Integrate.ai has published a whitepaper, for a framework to "operationalize ethics in machine learning systems", and Irvine says it's been called in to meet and "share perspectives" with regulators based on that.

"I think we're very GDPR-friendly based on the way that we have thought through and constructed the platform," he also says when asked whether the approach would be compliant with the European Union's tough new privacy framework (which also places some restrictions on entirely automated decisions when they could have a significant impact on individuals).

"I think you'll see GDPR and other regulations like that push more towards these type of privacy preserving platforms," he adds. "And hopefully away from a lot of the really creepy, weird stuff that is happening out there with consumer data that I think we all hope gets eradicated."

For the record, Irvine denies any suggestion that he was thinking of his old employer when he referred to "creepy, weird stuff" done with people's data, saying: "No, no, no!"

"What I did observe when I was there in ad tech in general, I think if you look at that landscape, I think there are many, many… worse examples of what is happening out there with data than I think the ones that we're seeing covered in the press. And I think as the light shines on more of that ecosystem of players, I think we will start to see that the ways they've thought about data, about collection, permissioning, usage, I think will change drastically," he adds.

"And the technology is there to be able to do it in a much more effective way without having to compromise results in too big a way. And I really hope that that sea change has already started, and I hope that it continues at a much more rapid pace than we've seen."

But while privacy concerns might be reduced by the use of an alternative to traditional data-pooling, depending on the exact techniques being used, additional ethical considerations are clearly being dialled sharply into view if companies are seeking to supercharge their profits by automating decision making in sensitive and impactful areas such as discounts (meaning some users stand to gain more than others).

The point is an AI system that's expert at spotting the lowest hanging fruit (in conversion terms) could start selectively distributing discounts to a narrow sub-section of users only, meaning other people might never even be offered discounts.

In short, it risks the platform creating unfair and/or biased outcomes.

Integrate.ai has recognized the ethical pitfalls, and appears to be trying to get ahead of them, hence its aforementioned 'Responsible AI in Consumer Enterprise' whitepaper.

Irvine also says that raising awareness around issues of bias and "ethical AI", and promoting "more responsible use and implementation" of its platform, is another priority over the next twelve months.

"The biggest concern is the unethical treatment of people in a lot of common, day-to-day decisions that companies are going to be making," he says of problems attached to AI. "And they're going to do it without understanding, and probably without bad intent, but the reality is the results will be the same, which is perpetuating a lot of biases and stereotypes of the past. Which would be really unfortunate.

"So hopefully we can continue to carve out a name, on that front, and shift the industry more to practices that we think are consistent with the world that we want to live in vs the one we might get stuck in."

The whitepaper was produced by a dedicated internal team, which he says focuses on AI ethics and fairness issues, and is headed up by VP of product - strategy, Kathryn Hume.

"We're doing a lot of research now with the Vector Institute for AI… on fairness in our AI models, because what we've seen so far is that, if left unattended, if all we did was run these models and not adjust for some of the ethical considerations, we would just perpetuate biases that we've seen in the historical data," he adds.

"We would pick up patterns that are more commonly associated with maybe reinforcing particular stereotypes… so we're putting a really dedicated effort (probably abnormally large, given our size and stage) towards leading in this space, and making sure that that's not the outcome that gets delivered through effective use of a platform like ours. But actually, hopefully, the total opposite: You have a better understanding of where those biases might creep in and they could be adjusted for in the models."

Combating unfairness in this type of AI tool would mean a company having to optimize conversion performance a bit less than it otherwise could.

Though Irvine suggests that's likely just in the short term. Over the longer term he argues you're laying the foundations for greater growth, because you're building a more inclusive business, saying: "We have this conversation a lot. I think it's good for business, it's just the time horizon that you might think about."

"We've got this window of time right now, that I think is a really precious window, where people are moving over from more deterministic software systems to these more probabilistic, AI-first platforms… They just operate much more effectively, and they learn much more effectively, so there will be a boost in performance no matter what. If we can get them moved over right off the bat onto a platform like ours that has more of an ethical safeguard, then they won't notice a drop off in performance, because it'll actually be better performance. Even if it's not optimized fully for short term profitability," he adds.

"And we think, over the long term it's just better business if you're a socially conscious, ethical company. We think, over time, especially this new generation of consumers, they start to look out for those things more… So we really hope that we're on the right side of this."

He also suggests that the wider visibility afforded by having AI doing the probabilistic pattern spotting (vs just using a set of rules) could even help companies identify unfairnesses they don't even realize might be holding their businesses back.

"We talk a lot about this concept of mutual lifetime value, which is how do we start to pull in the signals that show that people are getting value in being treated well, and can we use those signals as part of the optimization. And maybe you don't have all the signal you need on that front, and that's where being able to access a broader pool can actually start to highlight those biases more."


Read more: Integrate.ai pulls in $30M to help businesses make better customer-centric decisions

The European Union's executive body is doubling down on its push for platforms to pre-filter the Internet, publishing a proposal today for all websites to monitor uploads in order to be able to quickly remove terrorist uploads.

The Commission handed platforms an informal one-hour rule for removing terrorist content back in March. It's now proposing turning that into a law to prevent such content spreading its violent propaganda over the Internet.

For now the 'rule of thumb' regime continues to apply. But it's putting meat on the bones of its thinking, fleshing out a more expansive proposal for a regulation aimed at "preventing the dissemination of terrorist content online".

As per usual EU processes, the Commission's proposal would need to gain the backing of Member States and the EU parliament before it could be cemented into law.

One major point to note here is that existing EU law does not allow Member States to impose a general obligation on hosting service providers to monitor the information that users transmit or store. But in the proposal the Commission argues that, given the "grave risks associated with the dissemination of terrorist content", states could be allowed to "exceptionally derogate from this principle under an EU framework".

So it's essentially suggesting that Europeans' fundamental rights might not, in fact, be so fundamental. (Albeit, European judges might well take a different view, and it's very likely the proposals could face legal challenges should they be cast into law.)

What is being suggested would also apply to any hosting service provider that offers services in the EU, "regardless of their place of establishment or their size". So, seemingly, not just large platforms, like Facebook or YouTube, but (for example) anyone hosting a blog that includes a free-to-post comment section.

Websites that fail to promptly take down terrorist content would face fines, with the level of penalties being determined by EU Member States (Germany has already legislated to enforce social media hate speech takedowns within 24 hours, setting the maximum fine at €50M).

"Penalties are necessary to ensure the effective implementation by hosting service providers of the obligations pursuant to this Regulation," the Commission writes, envisaging the most severe penalties being reserved for systematic failures to remove terrorist material within one hour.

It adds: "When determining whether or not financial penalties should be imposed, due account should be taken of the financial resources of the provider." So, for example, individuals with websites who fail to moderate their comment section fast enough might not be served the very largest fines, presumably.

The proposal also encourages platforms to develop "automated detection tools" so they can take what it terms "proactive measures proportionate to the level of risk and to remove terrorist material from their services".

So the Commission's continued push for Internet pre-filtering is clear. (This is also a feature of its copyright reform, which is being voted on by MEPs later today.)

Albeit, it's not alone on that front. Earlier this year the UK government went so far as to pay an AI company to develop a terrorist propaganda detection tool that used machine learning algorithms trained to automatically detect propaganda produced by the Islamic State terror group, with a claimed "extremely high degree of accuracy". (At the time it said it had not ruled out forcing tech giants to use it.)

What is terrorist content for the purposes of this proposal? The Commission refers to an earlier EU directive on combating terrorism, which defines the material as "information which is used to incite and glorify the commission of terrorist offences, encouraging the contribution to and providing instructions for committing terrorist offences as well as promoting participation in terrorist groups".

And on that front you do have to wonder whether, for example, some of U.S. president Donald Trump's comments last year after the far right rally in Charlottesville where a counter protestor was murdered by a white supremacist (in which he suggested there were "fine people" among those same murderous and violent white supremacists) might not fall under that 'glorifying the commission of terrorist offences' umbrella, should, say, someone repost them to a comment section that was viewable in the EU…

Safe to say, even terrorist propaganda can be subjective. And the proposed regime will inevitably encourage borderline content to be taken down — having a knock-on impact upon online freedom of expression.

The Commission also wants websites and platforms to share information with law enforcement and other relevant authorities and with each other, suggesting the use of "standardised templates", "response forms" and "authenticated submission channels" to facilitate "cooperation and the exchange of information".

It tackles the problem of what it refers to as "erroneous removal" (i.e. content that's removed after being reported or erroneously identified as terrorist propaganda but which is subsequently, under requested review, determined not to be) by placing an obligation on providers to have "remedies and complaint mechanisms to ensure that users can challenge the removal of their content".

So platforms and websites will be obligated to police and judge speech, which they already do, of course, but the proposal doubles down on turning online content hosters into judges and arbiters of that same content.

The regulation also includes transparency obligations on the steps being taken against terrorist content by hosting service providers, which the Commission claims will ensure "accountability towards users, citizens and public authorities".

Other perspectives are of course available…

The Commission envisages all taken down content being retained by the host for a period of six months so that it could be reinstated if required, i.e. after a valid complaint, to ensure what it couches as "the effectiveness of complaint and review procedures in view of protecting freedom of expression and information".

It also sees the retention of takedowns helping law enforcement — meaning platforms and websites will continue to be co-opted into state law enforcement and intelligence regimes, getting further saddled with the burden and cost of having to safely store and protect all this sensitive data.

(On that the EC just says: "Hosting service providers need to put in place technical and organisational safeguards to ensure the data is not used for other purposes.")

The Commission would also create a system for monitoring the monitoring it's proposing platforms and websites undertake, thereby further extending the proposed bureaucracy, saying it would establish a "detailed programme for monitoring the outputs, results and impacts" within one year of the regulation being applied; and report on the implementation and the transparency elements within two years; evaluating the entire functioning of it four years after its coming into force.

The executive body says it consulted widely ahead of forming the proposals, including running an open public consultation, carrying out a survey of 33,500 EU residents, and talking to Member States' authorities and hosting service providers.

"By and large, most stakeholders expressed that terrorist content online is a serious societal problem affecting internet users and business models of hosting service providers," the Commission writes. "More generally, 65% of respondents to the Eurobarometer survey considered that the internet is not safe for its users and 90% of the respondents consider it important to limit the spread of illegal content online.

"Consultations with Member States revealed that while voluntary arrangements are producing results, many see the need for binding obligations on terrorist content, a sentiment echoed in the European Council Conclusions of June 2018. While overall, the hosting service providers were in favour of the continuation of voluntary measures, they noted the potential negative effects of emerging legal fragmentation in the Union.

"Many stakeholders also noted the need to ensure that any regulatory measures for removal of content, particularly proactive measures and strict timeframes, should be balanced with safeguards for fundamental rights, notably freedom of speech. Stakeholders noted a number of necessary measures relating to transparency, accountability as well as the need for human review in deploying automated tools."


Read more: Europe to push for one-hour takedown law for terrorist content
