Essential has been nearly radio silent since reports surfaced late last month that Andy Rubin was looking for a buyer for his hardware startup. The company didn't really confirm or deny the rumors, or say much else for that matter. A few weeks later, the company does have some news, but it's not what you were most likely expecting.

The startup just dropped the second modular accessory for its first smartphone. The Audio Adapter HD features a built-in amp and the ability to play back MQA (Master Quality Authenticated), a hi-res streaming audio technology. Oh, and there's a 3.5mm audio jack, because everything that's old is new again.

The new add-on is set to drop at some point this summer. The company has also teamed up with Tidal. The streaming service has reportedly had some issues of its own lately, though Tidal, for its part, offered a more outright denial. The partnership gives new and existing Essential customers a three-month trial subscription to Tidal's HiFi service, a taste of what they've been missing with their low bit rates.

The company has declined to provide pricing for the add-on. Its first mod, the 360-degree camera, retailed for $200 at launch, though that price has since dropped considerably at retailers like Amazon, much like the price of the Essential phone itself. As with the camera, the Audio Adapter HD feels like a niche product compared to Motorola's mods, which include things like battery packs and speakers.

At the very least, however, it does show that there's still some life left in the Essential line.

- Details

- Category: Technology

Read more: Essential is releasing a wired headphone jack accessory

Julia Beizer joined Bloomberg Media Group as its first chief product officer in January, and since then, she said, "Audio has been a big part of my world."

Specifically, Beizer's team has been releasing products for different smart speakers, including Apple's HomePod, Amazon's Echo Show and, most recently, Google Home, with the launch of the First Word news briefing for both Google Home and the Google Assistant app. Bloomberg has also turned its video news network TicToc (initially created for Twitter) into an audio podcast. And by leveraging Amazon Polly for text-to-speech conversion, the company now offers audio versions of every article on the Bloomberg website and app.

Beizer joined Bloomberg from The Huffington Post (which, like TechCrunch, is owned by Verizon's digital subsidiary Oath). She pointed out that these new initiatives represent a range of different approaches to audio news, from the "beautiful, bespoke, handcrafted audio projects" that you can create via podcasts, to an automated solution like text-to-speech that allows Bloomberg to offer audio in a more scalable way.

"What that really represents is utility," said Beizer. "We want to fit into our consumers' lives in different ways."

She added that since text-to-speech launched at the beginning of May, her team has found that "the people who use it, use it a lot," listening to two to three articles per session on average.

And beyond the success of individual products, Beizer suggested that these audio initiatives represent a new "culture of experimentation."

"Newsrooms historically thought a lot about what we have to offer to the world," Beizer said. "That's a mindset that's really built for the world when people had morning newspaper habits or watched the 6pm newscast every night. For us to be relevant in consumers' lives, we have to adapt to how they are consuming media."

That means trying out new things, and it also means shutting them down if they're not working.

"I often say: Launching things is my favorite thing to do, and killing things is my second favorite thing to do," she said. So it's possible that some of these audio products won't exist in a year, though she also argued, "Audio writ large, specific initiatives aside, is something I believe is a trend that isn't going away."

Not that Beizer is spending all her time on audio. She acknowledged that the "pivot to video" has become a punchline in digital media, but she said that as she looks ahead, she still wants to find new ways to repackage and promote Bloomberg's TV content for an online audience. She also said that the site's new paywall represents "a huge opportunity."

"We're completely rethinking how we deliver our content; we want it to be essential to users' lives," she said. "That ties directly into subscription. I've worked in subscription before, and it gives you real clarity about your user and your audience."

Read more: Bloomberg Media Group's chief product officer sees huge opportunities in audio

If AI innovation runs on data, the European Union's new General Data Protection Regulation (GDPR) seems poised to freeze AI advancement. The regulation prescribes a utopian data future where consumers can refuse companies access to their personally identifiable information (PII). Although the enforcement deadline has passed, the technical infrastructure and manpower needed to meet these requirements still do not exist in most companies today.

Coincidentally, the barriers to GDPR compliance are also bottlenecks to widespread AI adoption. Despite the hype, enterprise AI is still nascent: Companies may own petabytes of data that can be used for AI, but fully digitizing that data, knowing what the data tables actually contain and understanding who can access that data, where and how, remains a herculean coordination effort for even the most empowered internal champion. It's no wonder that many scrappy AI startups find themselves bogged down by customer data cleanup and custom integrations.

As multinationals and Big Tech overhaul their data management processes and tech stacks to comply with GDPR, here's how AI and data innovation, counterintuitively, also stand to benefit.

How GDPR impacts AI

GDPR covers the collection, processing and movement of data that can be used to identify a person, such as a name, email address, bank account information, social media posts, health information and more, all of which are currently used to power AI algorithms ranging from ad targeting to identifying terrorist cells.

The penalty for noncompliance is 4 percent of global revenue, or €20 million, whichever is higher. To put that in perspective: 4 percent of Amazon's 2017 revenue is $7.2 billion, Google's is $4.4 billion and Facebook's is $1.6 billion. These regulations apply to any citizen of the EU, no matter their current residence, as well as vendors upstream and downstream of the companies that collect PII.
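The penalty rule above is simply the larger of two terms. A minimal sketch, assuming turnover and the €20 million floor are expressed in the same currency (the dollar figures above mix currencies, so they are not recomputed here); `gdpr_max_fine` is a hypothetical helper name:

```python
def gdpr_max_fine(annual_turnover: float, floor: float = 20_000_000.0) -> float:
    """Maximum penalty as described above: the higher of 4% of global
    annual turnover or a fixed EUR 20 million floor (same currency assumed)."""
    if annual_turnover < 0:
        raise ValueError("turnover must be non-negative")
    four_percent = annual_turnover * 4 / 100
    return max(four_percent, floor)


print(gdpr_max_fine(100_000_000))    # 20000000.0 (the floor applies)
print(gdpr_max_fine(1_000_000_000))  # 40000000.0 (4 percent applies)
```

Under this rule the percentage term only overtakes the fixed floor once annual turnover exceeds €500 million, which is why the 4 percent figure matters mostly for large companies.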

Article 22 of the GDPR, titled "Automated Individual Decision-making, including Profiling," prescribes that AI cannot be used as the sole decision-maker in choices that have legal or similarly significant effects on users. In practice, this means an AI model cannot be the only step for deciding whether a borrower can receive a loan; the customer must be able to request that a human review the application.
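In code, this roughly means a model's output must be able to become a recommendation rather than a verdict. The sketch below is illustrative only: `decide_loan`, the score threshold and the reviewer callback are hypothetical, not drawn from the regulation's text or from any real lending system.

```python
from dataclasses import dataclass
from typing import Callable, Mapping, Optional

Features = Mapping[str, float]


@dataclass(frozen=True)
class LoanDecision:
    approved: bool
    decided_by: str  # "model" or "human"
    model_score: float


def decide_loan(
    features: Features,
    score_fn: Callable[[Features], float],
    threshold: float,
    human_review_requested: bool = False,
    reviewer: Optional[Callable[[Features, float], bool]] = None,
) -> LoanDecision:
    """Let the model decide only when no human review has been requested."""
    score = score_fn(features)
    if human_review_requested:
        if reviewer is None:
            # Fail loudly rather than silently letting the model decide alone.
            raise RuntimeError("human review requested but no reviewer is assigned")
        # The model score is passed along as input to the human, not as the verdict.
        return LoanDecision(reviewer(features, score), "human", score)
    return LoanDecision(score >= threshold, "model", score)
```

The design choice worth noting is that a requested review with no reviewer raises an error instead of falling back to the model, so the "human in the loop" cannot be skipped by accident.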

One way to avoid the cost of compliance, which includes hiring a data protection officer and building access controls, is to stop collecting data on EU residents altogether. This would bring PII-dependent AI innovation in the EU to a grinding halt. With the EU representing about 16 percent of global GDP, 11 percent of global online advertising spend and 9 percent of the global population in 2017, however, Big Tech will more likely invest heavily in solutions that will allow them to continue operating in this market.

Transparency mandates force better data accessibility

GDPR mandates that companies collecting consumer data must enable individuals to know what data is being collected about them, understand how it is being used, revoke permission to use specific data, correct or update data and obtain proof that the data has been erased if the customer requests it. To meet these potential requests, companies must shift from indiscriminately collecting data in a piecemeal and decentralized manner to establishing an organized process with a clear chain of control.

Any data that companies collect must be immediately classified as either PII or de-identified and assigned the correct level of protection. Its location in the company's databases must be traceable with an auditable trail: GDPR mandates that organizations handling PII must be able to find all copies of regulated data, regardless of how and where it is stored. These organizations will need to assign someone to manage their data infrastructure and fulfill these user privacy requests.
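A toy version of the bookkeeping this implies: classify each record at ingestion, record where it lives so every copy about a person can be found, and leave an audit entry for each registration and lookup. `DataInventory` and its fields are hypothetical; a real system would sit on top of actual databases and durable logs rather than in-memory dictionaries.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set, Tuple

Location = Tuple[str, str]  # (storage system, record key)


@dataclass
class DataInventory:
    """Hypothetical registry of where PII about each person is stored."""
    pii_locations: Dict[str, Set[Location]] = field(default_factory=lambda: defaultdict(set))
    audit_log: List[dict] = field(default_factory=list)

    def _log(self, **entry) -> None:
        entry["at"] = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(entry)

    def register(self, subject_id: str, system: str, key: str, is_pii: bool) -> None:
        # Classification happens at ingestion; only PII is indexed by person.
        if is_pii:
            self.pii_locations[subject_id].add((system, key))
        self._log(action="register", subject=subject_id if is_pii else None,
                  location=(system, key), cls="PII" if is_pii else "de-identified")

    def find_all_copies(self, subject_id: str) -> Set[Location]:
        # Every lookup is itself recorded, giving an auditable trail.
        self._log(action="lookup", subject=subject_id)
        return set(self.pii_locations.get(subject_id, set()))
```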

Having these data infrastructure and management processes in place will greatly lower a company's barriers to deploying AI. By fully understanding its data assets, a company can plan strategically where it can deploy AI in the near term using its existing data. Moreover, once it builds an AI road map, the company can determine where it needs to obtain additional data to build more complex and valuable AI algorithms. With the data streams simplified, storage mapped out and a chain of ownership established, the company can more effectively engage with AI vendors to deploy their solutions enterprise-wide.

More importantly, GDPR will force many companies dragging their feet on digitization to finally bite the bullet. The mandates require that data be portable: Companies must provide a way for users to download all of the data collected about them in a standard format. Currently, only 10 percent of all data is collected in a format for easing analysis and sharing, and more than 80 percent of enterprise data today is unstructured, according to Gartner estimates.
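Portability in its simplest form means gathering what each system holds about one person and serializing it in a common, machine-readable format. This sketch assumes JSON as that format and uses in-memory dictionaries as stand-ins for a company's data stores; `export_subject_data` is a hypothetical name.

```python
import json
from typing import Dict, Mapping


def export_subject_data(subject_id: str, stores: Mapping[str, Mapping[str, dict]]) -> str:
    """Collect everything each store holds about subject_id and return it as JSON.

    `stores` maps a store name to {subject_id: record}; it stands in for
    whatever databases a real company would have to query."""
    bundle: Dict[str, dict] = {}
    for store_name, records in stores.items():
        if subject_id in records:
            bundle[store_name] = records[subject_id]
    return json.dumps({"subject_id": subject_id, "data": bundle}, indent=2, sort_keys=True)
```

The hard part in practice is not the serialization but knowing which stores to query, which is exactly what the classification and location tracking described earlier provide.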

Much of this structuring and information extraction will initially have to be done manually, but Big Tech companies and many startups are developing tools to accelerate this process. According to PwC, the sectors most behind on digitization are healthcare, government and hospitality, all of which handle large amounts of unstructured data containing PII. We could expect to see a flood of AI innovation in these categories as the data becomes easier to access and use.

Consumer opt-outs require more granular AI model management

Under GDPR guidelines, companies must let users prevent the company from storing certain information about them. If the user requests that the company permanently and completely delete all the data about them, the company must comply and show proof of deletion. How this mandate might apply to an AI algorithm trained on data that a user wants to delete is not specifically prescribed and awaits its first test case.

Today, data is pooled together to train an AI algorithm, and it is unclear how an AI engineer would attribute the impact of a particular data point to the overall performance of the algorithm. If the enforcers of GDPR decide that the company must erase the effect of a unit of data on the AI model in addition to deleting the data, companies using AI must find ways to granularly explain how a model works and fine-tune the model to "forget" the data in question. Many AI models are black boxes today, and leading AI researchers are working to enable model explainability and tunability. The GDPR deletion mandate could accelerate progress in these areas.
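For one narrow class of models the "forget" problem has an exact answer: if a model's parameters are sums over training points, deleting a point means subtracting its contribution. The nearest-centroid classifier below is a hypothetical illustration of that special case only; it does not address the black-box models the paragraph is concerned with, where removing a point's influence generally requires retraining or approximate methods.

```python
from typing import Dict, List, Optional, Sequence, Tuple


class CentroidClassifier:
    """Nearest-centroid classifier stored as per-class sums and counts.

    Each training point contributes additively, so forgetting it yields the
    same model as retraining without it (up to floating-point rounding)."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.sums: Dict[str, List[float]] = {}
        self.counts: Dict[str, int] = {}
        # Kept only so we know what to subtract; the row is dropped on deletion.
        self._rows: Dict[str, Tuple[List[float], str]] = {}

    def add(self, record_id: str, x: Sequence[float], label: str) -> None:
        if record_id in self._rows:
            raise KeyError(f"duplicate record {record_id}")
        if len(x) != self.dim:
            raise ValueError("wrong dimensionality")
        self._rows[record_id] = (list(x), label)
        s = self.sums.setdefault(label, [0.0] * self.dim)
        for i, v in enumerate(x):
            s[i] += v
        self.counts[label] = self.counts.get(label, 0) + 1

    def forget(self, record_id: str) -> None:
        x, label = self._rows.pop(record_id)
        s = self.sums[label]
        for i, v in enumerate(x):
            s[i] -= v
        self.counts[label] -= 1
        if self.counts[label] == 0:
            del self.counts[label], self.sums[label]

    def predict(self, x: Sequence[float]) -> Optional[str]:
        best, best_dist = None, float("inf")
        for label, n in self.counts.items():
            centroid = [v / n for v in self.sums[label]]
            dist = sum((a - b) ** 2 for a, b in zip(x, centroid))
            if dist < best_dist:
                best, best_dist = label, dist
        return best
```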

In the nearer term, these GDPR mandates could shape best practices for UX and AI model design. Today, GDPR-compliant companies offer users the binary choice of allowing full, effectively unrestricted use of their data or no access at all. In the future, product designers may want to build more granular data access permissions.

For example, rather than deleting Facebook altogether, a user could refuse companies access to specific sets of information, such as their network of friends or their location data. AI engineers anticipating the need to trace the effect of specific data on a model may choose to build a series of simple models, each optimizing on a single dimension, instead of one monolithic and very complex model. This approach may have performance trade-offs, but it would make model management more tractable.
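A sketch of the "series of simple models" idea, assuming each sub-model reads exactly one category of user data and their scores are averaged. `granular_score`, the category names and the neutral default are hypothetical; the point is that revoking a category means its sub-model is simply skipped, while the remaining sub-models are untouched.

```python
from typing import Callable, Mapping, Sequence, Set

# Each sub-model sees only one category of user data.
SubModel = Callable[[Sequence[float]], float]


def granular_score(
    user_data: Mapping[str, Sequence[float]],
    sub_models: Mapping[str, SubModel],
    permitted: Set[str],
    default: float = 0.5,
) -> float:
    """Average the sub-models whose data category the user has permitted;
    data in revoked categories is never read."""
    usable = [c for c in sub_models if c in permitted and c in user_data]
    if not usable:
        return default  # neutral score when no category may be used
    return sum(sub_models[c](user_data[c]) for c in usable) / len(usable)


models = {"friends": lambda v: 1.0, "location": lambda v: 0.0}
data = {"friends": [3.0], "location": [1.0, 2.0]}
print(granular_score(data, models, {"friends"}))  # 1.0: location is ignored
```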

Building trust for more data tomorrow

The new regulations require companies to protect PII with a level of security previously limited to patient health and consumer finance data. Nearly half of all companies recently surveyed by Experian about GDPR are adopting technology to detect and report data breaches as soon as they occur. As companies adopt more sophisticated data infrastructure, they will be able to determine who has and should have access to each data stream and manage permissions accordingly. Moreover, the company may also choose to build tools that immediately notify users if their information was accessed by an unauthorized party; Facebook offers a similar service to its employees, called a "Sauron alert."
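A minimal sketch of per-stream permissions combined with an owner alert. `GuardedStream` and the `notify` callback are hypothetical; this version alerts on a denied attempt, whereas catching misuse by someone who is on the allow-list would need auditing beyond this simple check.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class GuardedStream:
    """Hypothetical wrapper around one data stream: an allow-list of
    accessors plus a callback that alerts the data's owner."""
    owner: str
    data: dict
    allowed: Set[str]
    notify: Callable[[str, str], None]
    denied_log: List[str] = field(default_factory=list)

    def read(self, accessor: str) -> dict:
        if accessor not in self.allowed:
            self.denied_log.append(accessor)
            # Alert the owner immediately, then refuse the read.
            self.notify(self.owner, f"unauthorized access attempt by {accessor}")
            raise PermissionError(f"{accessor} may not read {self.owner}'s data")
        return dict(self.data)  # hand out a copy, not the stored object
```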

Although the restrictions may appear to reduce tech companies' ability to access data in the short term, 61 percent of companies see additional benefits of GDPR-readiness beyond penalty avoidance, according to a recent Deloitte report. Taking these precautions to earn customer trust may eventually lower the cost of acquiring high-quality, highly dimensional data.

In this post-GDPR future, companies no longer have to infer intent from expensive schemes to sneakily capture customer information. Improved data infrastructure will have enabled early AI applications to demonstrate their value, encouraging more customers to voluntarily share even more information about themselves to trustworthy companies.

Unproven upside alone has always been insufficient to motivate cross-functional modernization, but the threat of a multi-billion-dollar penalty may finally spur these companies to action. More importantly, GDPR is but the first of much more data privacy regulation to come, and many countries across the world look to it as a model for their own upcoming policies. As companies worldwide lay the groundwork for compliance and transparency, they're also paving the way to an even more vibrant AI future to come.

Read more: GDPR panic may spur data and AI innovation

In May, Facebook teased a new feature called 3D photos, and it's just what it sounds like. However, beyond a short video and the name, little was said about it. But the company's computational photography team has just published the research behind how the feature works and, having tried it myself, I can attest that the results are really quite compelling.

In case you missed the teaser, 3D photos will live in your news feed just like any other photos, except when you scroll by them, touch or click them, or tilt your phone, they respond as if the photo were actually a window into a tiny diorama, with corresponding changes in perspective. It will work not only for ordinary pictures of people and dogs, but also for landscapes and panoramas.

It sounds a little hokey, and I'm about as skeptical as they come, but the effect won me over quite quickly. The illusion of depth is very convincing, and it does feel like a little magic window looking into a time and place rather than some 3D model, which, of course, it is. Here's what it looks like in action:

I talked about the method of creating these little experiences with Johannes Kopf, a research scientist at Facebook's Seattle office, where its Camera and computational photography departments are based. Kopf is co-author (with University College London's Peter Hedman) of the paper describing the methods by which the depth-enhanced imagery is created; they will present it at SIGGRAPH in August.

Interestingly, the origin of 3D photos wasn't an idea for how to enhance snapshots, but rather how to democratize the creation of VR content. VR content is all synthetic, Kopf pointed out, and no casual Facebook user has the tools or inclination to build 3D models and populate a virtual space.

One exception to that is panoramic and 360 imagery, which is usually wide enough that it can be effectively explored via VR. But the experience is little better than looking at the picture printed on butcher paper floating a few feet away. Not exactly transformative. What's lacking is any sense of depth, so Kopf decided to add it.

The first version I saw had users moving their ordinary cameras in a pattern capturing a whole scene; by careful analysis of parallax (essentially how objects at different distances shift different amounts when the camera moves) and phone motion, that scene could be reconstructed very nicely in 3D (complete with normal maps, if you know what those are).

But inferring depth data from a single camera's rapid-fire images is a CPU-hungry process and, though effective in a way, also rather dated as a technique, especially when many modern phones actually have two cameras, like a tiny pair of eyes. And it is dual-camera phones that will be able to create 3D photos (though there are plans to bring the feature downmarket).

By capturing images with both cameras at the same time, parallax differences can be observed even for objects in motion. And because the device is in the exact same position for both shots, the depth data is far less noisy, involving less number-crunching to get into usable shape.
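The geometry behind dual-camera depth is the standard pinhole-stereo relation: for two rectified cameras a baseline B apart with focal length f (in pixels), a point that shifts by d pixels between the two images lies at depth Z = f·B/d. The sketch below only evaluates that textbook formula; it is not Facebook's pipeline, and the 1000-pixel focal length and 1 cm baseline in the usage lines are assumed values, not the specs of any particular phone.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole stereo relation Z = f * B / d for a rectified camera pair.

    disparity_px: horizontal shift of the same point between the two images
    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two lenses, in meters
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


near = depth_from_disparity(20, focal_px=1000, baseline_m=0.01)  # 0.5 m
far = depth_from_disparity(2, focal_px=1000, baseline_m=0.01)    # 5.0 m
```

Nearby objects shift far more than distant ones, which is also why a one-pixel error matters little up close but a lot for faraway objects, and why cleaner input data helps.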

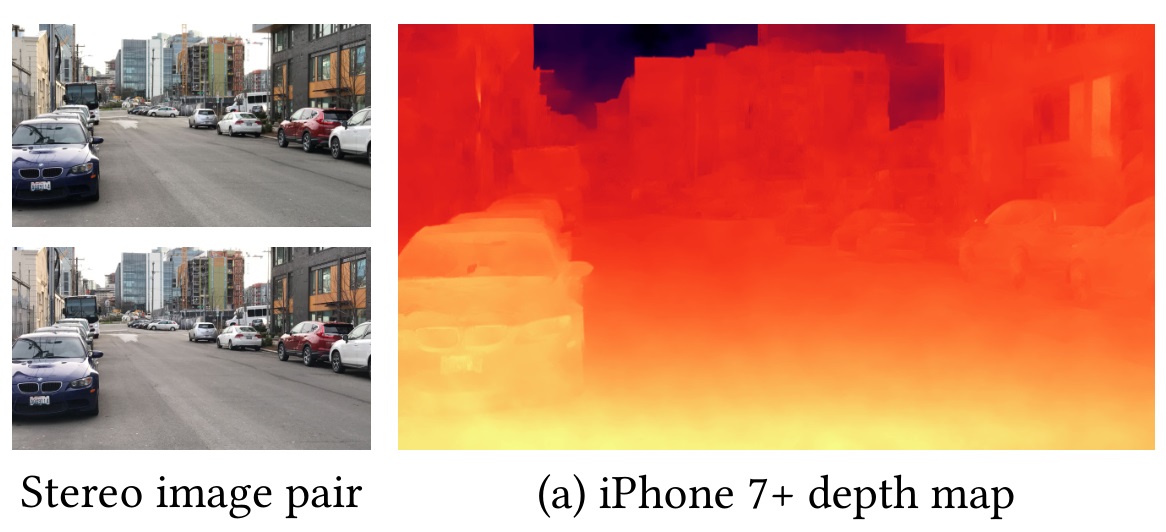

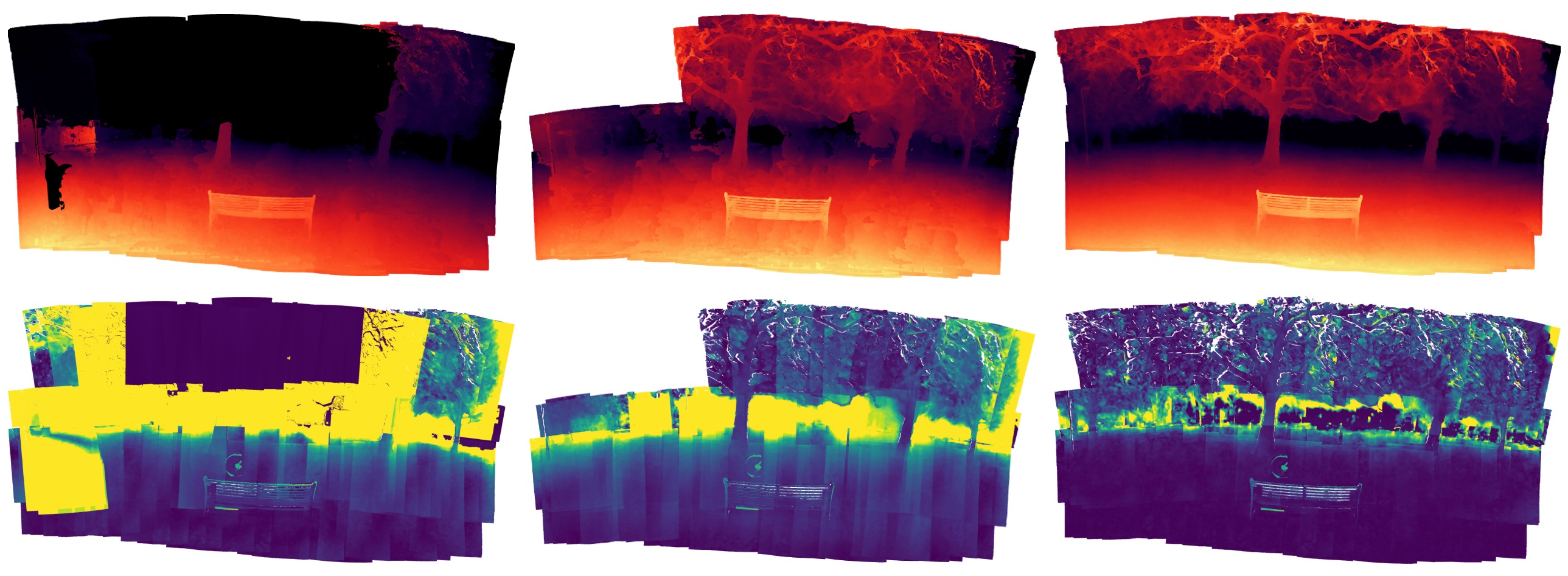

Here's how it works. The phone's two cameras take a pair of images, and the device immediately does its own work to calculate a "depth map" from them, an image encoding the calculated distance of everything in the frame. The result looks something like this:

Apple, Samsung, Huawei, Google: they all have their own methods for doing this baked into their phones, though so far it's mainly been used to create artificial background blur.

The problem with that is that the depth map created doesn't have any kind of absolute scale. For example, light yellow doesn't always mean 10 feet, nor does dark red always mean 100 feet. An image taken a few feet to the left with a person in it might have yellow indicating 1 foot and red meaning 10. The scale is different for every photo, which means that if you take more than one, let alone dozens or a hundred, there's little consistent indication of how far away a given object actually is, which makes stitching them together realistically a pain.

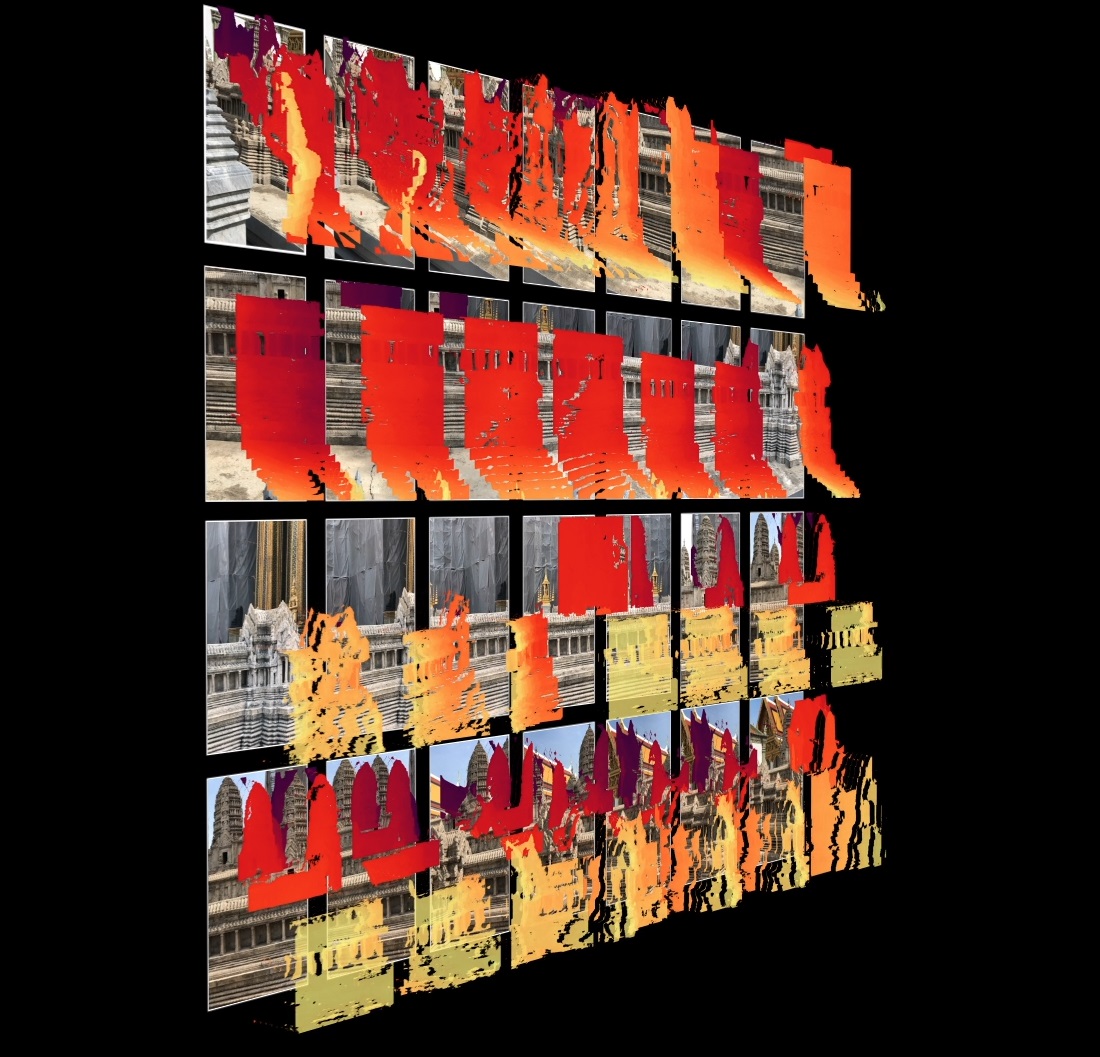

That's the problem Kopf, Hedman and their colleagues took on. In their system, the user takes multiple images of their surroundings by moving their phone around; it captures an image (technically two images and a resulting depth map) every second and adds it to its collection.

In the background, an algorithm looks at both the depth maps and the tiny movements of the camera captured by the phone's motion detection systems. Then the depth maps are essentially massaged into the correct shape to line up with their neighbors. This part is impossible for me to explain because it's the secret mathematical sauce that the researchers cooked up; if you're curious and like Greek, the paper has the details.
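The researchers' actual alignment is the part the paper works out in detail, and the article describes it as reshaping depth maps, which suggests something richer than a single correction per image. As a far simpler stand-in that only illustrates putting one depth map on its neighbor's scale, the sketch below fits one scale s and offset t by ordinary least squares over points both maps see. `fit_scale_offset` is a hypothetical helper, not Facebook's method.

```python
from typing import Sequence, Tuple


def fit_scale_offset(src: Sequence[float], ref: Sequence[float]) -> Tuple[float, float]:
    """Least-squares s, t minimizing sum((s * src[i] + t - ref[i]) ** 2).

    src and ref hold depth values for the same scene points (for example,
    the overlap between two neighboring captures); applying s and t to src
    expresses it on ref's scale."""
    n = len(src)
    if n != len(ref) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_src = sum(src) / n
    mean_ref = sum(ref) / n
    var = sum((x - mean_src) ** 2 for x in src)
    if var == 0:
        raise ValueError("src values are constant; scale is undetermined")
    cov = sum((x - mean_src) * (y - mean_ref) for x, y in zip(src, ref))
    s = cov / var
    return s, mean_ref - s * mean_src
```

Once s and t are known, the whole source map can be rescaled with `[s * d + t for d in src_map]` before stitching.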

Not only does this create a smooth and accurate depth map across multiple exposures, but it does so really quickly: about a second per image, which is why the tool they created shoots at that rate, and why they call the paper "Instant 3D Photography."

Next, the actual images are stitched together, the way a panorama normally would be. But by utilizing the new and improved depth map, this process can be expedited and reduced in difficulty by, they claim, around an order of magnitude.

Because different images captured depth differently, aligning them can be difficult, as the left and center examples show: many parts will be excluded or produce incorrect depth data. The one on the right is Facebook's method.

Then the depth maps are turned into 3D meshes (a sort of two-dimensional model or shell); think of it like a papier-mâché version of the landscape. But then the mesh is examined for obvious edges, such as a railing in the foreground occluding the landscape in the background, and "torn" along these edges. This spaces out the various objects so they appear to be at their various depths, and move with changes in perspective as if they are.
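A toy version of the mesh-and-tear step: triangulate a grid of depth values and drop any triangle that spans a large depth jump, which separates foreground from background along depth edges. `torn_mesh` and its `max_jump` threshold are hypothetical, vertices are left as grid indices rather than back-projected 3D points, and Facebook's system detects edges in its own way.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[int, int]  # (row, col) index into the depth grid
Triangle = Tuple[Vertex, Vertex, Vertex]


def torn_mesh(depth: Sequence[Sequence[float]], max_jump: float) -> List[Triangle]:
    """Triangulate a depth grid, dropping any triangle whose corner depths
    differ by more than max_jump, i.e. "tearing" the mesh at depth edges."""
    rows, cols = len(depth), len(depth[0])
    triangles: List[Triangle] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            tl, tr, bl, br = (r, c), (r, c + 1), (r + 1, c), (r + 1, c + 1)
            # Split each grid cell into two triangles.
            for tri in ((tl, tr, bl), (tr, br, bl)):
                zs = [depth[i][j] for i, j in tri]
                if max(zs) - min(zs) <= max_jump:
                    triangles.append(tri)
    return triangles
```

In a 2-by-3 grid whose right column is much farther away, only the two triangles of the left cell survive, so the near and far surfaces end up as separate pieces.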

Although this effectively creates the diorama effect I described at first, you may have guessed that the foreground would appear to be little more than a paper cutout, since, if it were a person's face captured from straight on, there would be no information about the sides or back of their head.

This is where the final step comes in: "hallucinating" the remainder of the image via a convolutional neural network. It's a bit like a content-aware fill, guessing what goes where based on what's nearby. If there's hair, well, that hair probably continues along. And if it's a skin tone, it probably continues too. So it convincingly recreates those textures along an estimation of how the object might be shaped, closing the gap so that when you change perspective slightly, it appears that you're really looking "around" the object.
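The article says Facebook uses a convolutional neural network for this step. The sketch below is deliberately not that: it is a naive, non-learned fill that repeatedly sets each missing pixel to the average of its already-known neighbors, capturing only the "continue what's nearby" intuition. `fill_holes` is a hypothetical helper operating on a small grayscale grid.

```python
from typing import List, Sequence, Set, Tuple


def fill_holes(img: Sequence[Sequence[float]],
               missing: Sequence[Sequence[bool]],
               max_iters: int = 1000) -> List[List[float]]:
    """Fill pixels flagged True in `missing` by repeatedly averaging their
    already-known 4-neighbors, so holes are filled inward from their borders."""
    h, w = len(img), len(img[0])
    out = [list(row) for row in img]
    holes: Set[Tuple[int, int]] = {(r, c) for r in range(h) for c in range(w) if missing[r][c]}
    for _ in range(max_iters):
        if not holes:
            break
        filled = {}
        for r, c in holes:
            known = [out[rr][cc]
                     for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                     if 0 <= rr < h and 0 <= cc < w and (rr, cc) not in holes]
            if known:
                filled[(r, c)] = sum(known) / len(known)
        if not filled:
            break  # remaining holes have no known pixels next to them
        # Apply this round's values only after computing all of them.
        for (r, c), value in filled.items():
            out[r][c] = value
        holes.difference_update(filled)
    return out
```

A learned model can invent plausible texture such as strands of hair; this averaging only produces smooth blends, which is why it serves here purely as an illustration of the idea.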

The end result is an image that responds realistically to changes in perspective, making it viewable in VR or as a diorama-type 3D photo in the news feed.

In practice it doesn't require anyone to do anything different, like download a plug-in or learn a new gesture. Scrolling past these photos changes the perspective slightly, alerting people to their presence, and from there all the interactions feel natural. It isn't perfect (there are artifacts and weirdness in the stitched images if you look closely, and of course mileage varies on the hallucinated content), but it is fun and engaging, which is much more important.

The plan is to roll out the feature mid-summer. For now, the creation of 3D photos will be limited to devices with two cameras (that's a limitation of the technique), but anyone will be able to view them.

But the paper does also address the possibility of single-camera creation by way of another convolutional neural network. The results, only briefly touched on, are not as good as the dual-camera systems, but still respectable and better and faster than some other methods currently in use. So those of us still living in the dark age of single cameras have something to hope for.

Read more: How Facebook’s new 3D photos work

Google has published a set of fuzzy but otherwise admirable "AI principles" explaining the ways it will and won't deploy its considerable clout in the domain. "These are not theoretical concepts; they are concrete standards that will actively govern our research and product development and will impact our business decisions," wrote CEO Sundar Pichai.

The principles follow several months of low-level controversy surrounding Project Maven, a contract with the U.S. military that involved image analysis on drone footage. Some employees had opposed the work and even quit in protest, but really the issue was a microcosm for anxiety regarding AI at large and how it can and should be employed.

Consistent with Pichai's assertion that the principles are binding, Google Cloud CEO Diane Greene confirmed today in another post what was rumored last week, namely that the contract in question will not be renewed or followed with others. Left unaddressed are reports that Google was using Project Maven as a means to achieve the security clearance required for more lucrative and sensitive government contracts.

The principles themselves are as follows, with relevant portions quoted from their descriptions:

- Be socially beneficial: Take into account a broad range of social and economic factors, and proceed where we believe that the overall likely benefits substantially exceed the foreseeable risks and downsides…while continuing to respect cultural, social, and legal norms in the countries where we operate.

- Avoid creating or reinforcing unfair bias: Avoid unjust impacts on people, particularly those related to sensitive characteristics such as race, ethnicity, gender, nationality, income, sexual orientation, ability, and political or religious belief.

- Be built and tested for safety: Apply strong safety and security practices to avoid unintended results that create risks of harm.

- Be accountable to people: Provide appropriate opportunities for feedback, relevant explanations, and appeal.

- Incorporate privacy design principles: Give opportunity for notice and consent, encourage architectures with privacy safeguards, and provide appropriate transparency and control over the use of data.

- Uphold high standards of scientific excellence: Work with a range of stakeholders to promote thoughtful leadership in this area, drawing on scientifically rigorous and multidisciplinary approaches…responsibly share AI knowledge by publishing educational materials, best practices, and research that enable more people to develop useful AI applications.

- Be made available for uses that accord with these principles: Limit potentially harmful or abusive applications. (Scale, uniqueness, primary purpose, and Google's role to be factors in evaluating this.)

In addition to stating what the company will do, Pichai also outlines what it won't do. Specifically, Google will not pursue or deploy AI in the following areas:

- Technologies that cause or are likely to cause overall harm. (Subject to risk/benefit analysis.)

- Weapons or other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people.

- Technologies that gather or use information for surveillance violating internationally accepted norms.

- Technologies whose purpose contravenes widely accepted principles of international law and human rights.

(No mention of being evil.)

In the seven principles and their descriptions, Google leaves itself considerable leeway with the liberal application of words like "appropriate." When is an "appropriate" opportunity for feedback? What is "appropriate" human direction and control? How about "appropriate" safety constraints?

It's arguable that it is too much to expect hard rules along these lines on such short notice, but I would argue that it is not in fact short notice: Google has been a leader in AI for years and has had a great deal of time to establish more than principles.

For instance, its promise to "respect cultural, social, and legal norms" has surely been tested in many ways. Where can we see when practices have been applied in spite of those norms, or where Google policy has bent to accommodate the demands of a government or religious authority?

And in the promise to avoid creating bias and be accountable to people, surely (based on Google's existing work here) there is something specific to say? For instance, if any Google-involved system has outcomes based on sensitive data or categories, will the system be fully auditable and available for public attention?

The ideas here are praiseworthy, but AI's applications are not abstract: these systems are being used today to determine deployments of police forces, choose rates for home loans or analyze medical data. Real rules are needed, and if Google really intends to keep its place as a leader in the field, it must establish them, or, if they are already established, publish them prominently.

In the end, it may be the shorter list of things Google won't do that proves more restrictive. Although the use of "appropriate" in the principles gives the company room for interpretation, the opposite is true of its definitions of forbidden pursuits. Those definitions are highly indeterminate, and broad interpretations by watchdogs of phrases like "likely to cause overall harm" or "internationally accepted norms" may result in Google's own rules being unexpectedly prohibitive.

"We acknowledge that this area is dynamic and evolving, and we will approach our work with humility, a commitment to internal and external engagement, and a willingness to adapt our approach as we learn over time," wrote Pichai. We will soon see the extent of that willingness.

Read more: Google’s new ‘AI principles’ forbid its use in weapons and human rights violations

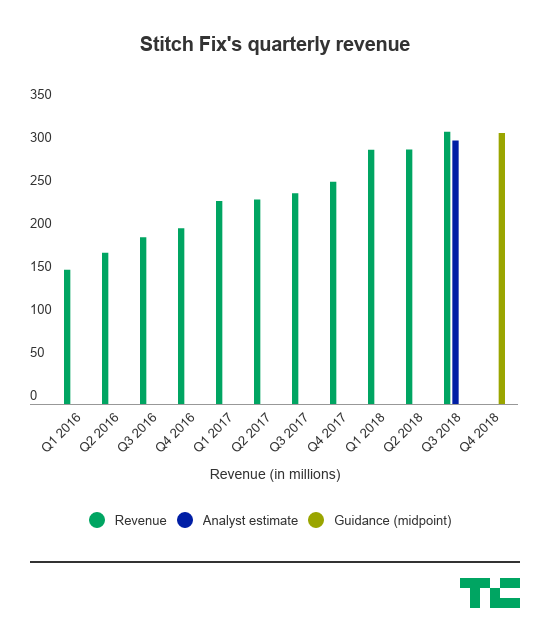

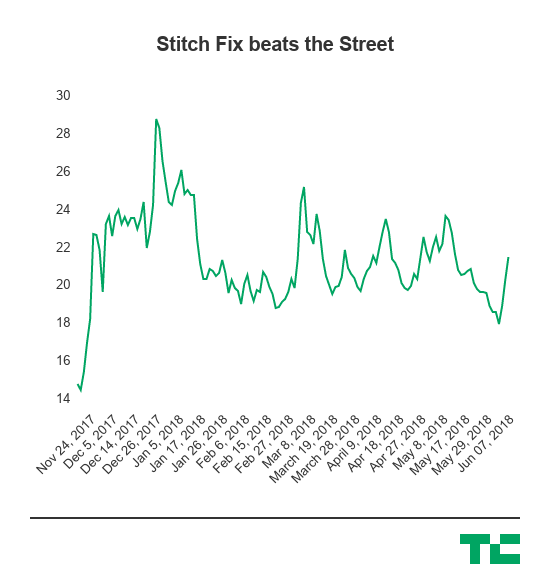

Stitch Fix, one of last year's high-profile IPOs, has had a bumpy ride for the past few quarters, but it blew out expectations this afternoon for its most recent quarter, and the stock went absolutely nuts.

There's also a ton of news coming out of the company today, including the hire of a new chief marketing officer and the launch of Stitch Fix Kids. The timing is good: the company appears to be packing everything into one announcement that is serving as a very pleasant surprise to Wall Street, which is looking for as many signals as it can get that the subscription e-commerce company will end up as one of the more successful IPO stories. Shares of the company were up more than 14 percent after the release came out, as the company beat Wall Street's expectations across the board. The stock price is not the best barometer of a company's health, but it is a public one, and it also helps determine whether the company can lock up the best talent.

However, after that initial jump, Stitch Fix's stock came back down to Earth and is up around 4 percent.

Here's the bottom line for the company:

- Q3 Revenue: $316.7 million, compared to $306.4 million in estimates from Wall Street and up 29 percent year-over-year.

- Q3 Earnings: 9 cents per share, compared to 3 cents per share in estimates from Wall Street.

- Q4 Revenue Guidance: $310 million to $320 million, compared to Wall Street estimates of around $314 million.

- Cost of goods sold: $178.5 million, up from $139.7 million in Q3 last year.

- Gross Margin: 43.6 percent, up from 43 percent in Q3 last year.

- Advertising spend: $25.2 million, up from $21.3 million in Q3 last year.

- Active clients: 2.7 million, up 30 percent year-over-year (2.5 million last quarter).

- Q3 Net income: $9.5 million ($12.4 million in adjusted EBITDA).
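The figures above hang together arithmetically. A quick check, with all amounts in millions of dollars: gross margin is revenue minus cost of goods sold, divided by revenue, and the prior-year quarter's revenue can be backed out from its COGS and margin. Because last year's 43 percent margin is rounded, the implied prior-year revenue is only approximate; this is a consistency check, not a reported number, and the helper names are hypothetical.

```python
def gross_margin(revenue: float, cogs: float) -> float:
    """Fraction of revenue left after cost of goods sold."""
    return (revenue - cogs) / revenue


def implied_revenue(cogs: float, margin: float) -> float:
    """Back out revenue from COGS and a gross-margin fraction."""
    return cogs / (1 - margin)


q3_margin = gross_margin(316.7, 178.5)   # ~0.436, matching the reported 43.6%
prior_q3 = implied_revenue(139.7, 0.43)  # ~245 ($M), implied rather than reported
growth = 316.7 / prior_q3 - 1            # ~0.29, consistent with "up 29 percent"
```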

Stitch Fix Kids will carry sizes 2T to 14 across a diverse range of aesthetics "to give kids the freedom to express themselves in clothing that they feel great wearing," the company said. Those Fixes will include 8 to 12 items from both market and exclusive brands. Stitch Fix launched Stitch Fix Plus in February last year.

"Our new Stitch Fix Kids offering is a testament to the scalability of our platform," CEO and founder Katrina Lake said in a statement accompanying the release. "We're excited for Stitch Fix to style everyone in the family and to create an effortless way for parents to shop for themselves and their children. Our goal is to provide unique, affordable kids clothing in a wide range of styles, giving our littlest clients the freedom to express themselves in clothing that they love and feel great wearing."

Stitch Fix was widely considered a successful IPO last year, though it faced some challenges over the first part of this year. But as it has expanded into new lines of subscriptions, its customer base clearly continues to grow, and the company is still finding new areas to expand into, including the upcoming launch of Kids that it announced today. As with many recent IPOs, Wall Street is likely going to look for continued growth in the core business (meaning subscribers), but Stitch Fix is showing that it's able to avoid setting cash on fire the way fresh IPOs sometimes do.

Stitch Fix's new CMO, Deirdre Findlay, comes to the company from Google, where she oversaw the Google Home hardware products, which included Home and Chromecast. Before that, Findlay built a pretty extensive history in marketing across a wide variety of verticals beyond tech, including work with Whirlpool Brands, Allstate Insurance, MillerCoors and Kaiser Permanente, the company said. While Stitch Fix is a digitally native company, it's not exactly an explicit tech company, and it requires expertise outside the realm of typical tech marketing talent, so getting someone with a robust background like that would be important as it continues to expand into new areas of growth.
