Technology

Diversity and inclusion is a trash fire in Silicon Valley and in the business world at large. But let's just focus on tech for now. At the Code Conference this evening, All Raise and Cowboy Ventures Partner Aileen Lee, shift7 CEO Megan Smith and StubHub President Sukhinder Singh Cassidy talked about the state of diversity and inclusion in tech. Lee kicked things off by explaining why the statement that someone is such a "good guy" bothers her.

Oftentimes, she said, that's the only qualification many of these men need to get the opportunity to invest in companies or work at certain companies. Meanwhile, if someone suggests a woman or person of color, Lee said, the questions are totally different and focused on qualifications.

"Good guys have hired and funded good guys," Lee said.

Moving forward, "we need to systematically map out our industry and business processes and try to take the biases out of them," Lee said. She added, "people have not been given a fair shot and we need to kind of re-engineer our business."

"Last year it was like every month there was a new story where you just could no longer ignore it," Lee said. "We have a lot of work to do but I'm pretty optimistic."

She pointed to a board meeting she sat in on where the male CEO pointed out, unprompted, that he sees the company is all male and is at risk of becoming a company no one would want to work for.

The panel also touched on the importance of diversity at the board level and some backlash. For example, some firms have suggested men don't have one-on-one meetings with women. But Lee says, "we're definitely not going to solve this problem by men saying they're afraid to meet with women."

Toward the end of the panel, Smith pointed out that "the people who are most left out are women of color."

While there were women of color on stage at the Code Conference this week, Smith's assertion was especially notable given the absence of black men and women.

- Category: Technology

Read more: Cowboy Ventures’ Aileen Lee says enough with favoring the ‘good guys’

This super creepy Tom Waits number pops into my head every time I read about another Apple content acquisition. For a billion-dollar project from one of the world's biggest companies, the company's upcoming streaming service is shaping up to be a strange collection of original content.

Of course, I'm not really sure what I expected after Apple unleashed Carpool Karaoke and Planet of the Apps on the world. Neither was the kind of thing that imbues you with confidence in a company's programming choices.

I wrote a review of sorts of the former here, but was willing to give the show the benefit of the doubt that it just wasn't for me, like cilantro, cats or late-era Radiohead. But clearly I wasn't alone on this one. And Planet of the Apps: the less said about that one the better, probably. Neither particularly jibes with Eddy Cue's whole "We're not after quantity, we're after quality" spiel.

Announcements have picked up considerably, even in the few months following that appearance at SXSW, but Apple's got a lot of catching up to do against content juggernauts like Netflix, Hulu and even Amazon. Of course, the company's got a long, proud history of showing up a bit late to the party and still blowing the competition out of the water in the hardware space.

And while Apple Music is still far from overtaking Spotify, the music streaming service has been adding subscribers at a steady clip, courtesy of, among other things, being built directly into the company's software offerings, a fringe benefit that Apple's eventual video streaming service will no doubt share. It's true, of course, that users are more likely to subscribe to multiple video streaming services than music ones, but the company's going to have to offer more than ecosystem accessibility. At this point, however, it's hard not to side with Fox CEO James Murdoch's comments on the matter from earlier today.

"Going piece by piece, one by one, show by show, etc., is gonna take a long time to really move the dial and having something mega," the exec told a crowd at the Code Conference. "I do think that's gonna be very challenging."

And this first round of programming is a bit of a mixed bag. Among the current crop of offerings, Amazing Stories feels like close to a slam dunk, because if the combination of Spielberg and nostalgia can make Ready Player One a box office success story, then, well, surely it can work on anything, right?

Perhaps it's the dribs and drabs with which the company has been revealing its content play over a matter of months. When Apple wanted to launch a streaming music service, the company went ahead and bought Beats in 2014. Sure, the headphone business was a nice bonus, but it was pretty clear from the outset that Beats Music was the real meat of that deal. A year later, Apple Music was unleashed on the world.

The latest rumors have the company's video streaming service "launching as early as March 2019." That gives the company a little less than a year to really wow us with original content announcements, if it really wants to hit the ground running (assuming, of course, that many or most of the titles are already in production).

More likely, the company will ultimately ease into it. Apple Music, after all, didn't exactly light the world on fire at launch, and Apple's got no shortage of revenue streams at the moment, so it certainly won't go bust if its billion-dollar investment fails to pay off overnight. But the competition is fierce for this one, extending beyond obvious competitors like Netflix and Hulu to longstanding networks like HBO, which are all vying to lock you in to monthly fees.

This battle won't be easily won. The company has been mostly tight-lipped in all of this (as is its custom), but success is going to take a long-term commitment, with the understanding that it will most likely require a long runway to recoup its investment.

That projected $4 billion annual investment looks like a good place to start, but with Netflix planning to spend double that amount this year and Amazon potentially on target to pass it, Apple's in for a bloody and expensive fight.

Read more: Is Apple ready to take on Netflix?

The Office of Management and Budget reports that the federal government is a shambles, cybersecurity-wise anyway. Finding little situational awareness, few standard processes for reporting or managing attacks and almost no agencies adequately performing even basic encryption, the OMB concluded that "the current situation is untenable."

All told, nearly three quarters of federal agencies have cybersecurity programs that qualified as either "at risk" (significant gaps in security) or "high risk" (fundamental processes not in place).

The report, which you can read here, lists four major findings, each with its own pitiful statistics and recommendations that occasionally amount to a complete about-face or overhaul of existing policies.

1. "Agencies do not understand and do not have the resources to combat the current threat environment."

The simple truth and perhaps origin of all these problems is that the federal government is a slow-moving beast that can't keep up with the nimble threat of state-sponsored hackers and the rapid pace of technology. The simplest indicator of this problem is perhaps this: of the 30,899 (!) known successful compromises of federal systems in FY 2016, 11,802 of them never even had their threat vector identified.

38 percent of attacks had no identified method or attacker.
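As a quick sanity check, the 38 percent figure follows directly from the two incident counts quoted above. This is only a minimal sketch of that arithmetic; the variable names are illustrative and do not come from the OMB report.

```python
# Incident counts for FY 2016 as quoted in the article.
total_compromises = 30_899
unidentified_vector = 11_802

# Share of successful compromises whose threat vector was never identified.
share = unidentified_vector / total_compromises
share_percent = round(share * 100, 1)

print(f"{share_percent}% of compromises had no identified threat vector")
```

Rounded to the nearest whole number, that is the 38 percent cited in the report.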

This lack of situational awareness means that even if they have budgets in the billions, these agencies don't have the capability to deploy them effectively.

While cyber spending increases year-over-year, OMB found that agencies are not effectively using available information, such as threat intelligence, incident data, and network traffic flow data to determine the extent that assets are at risk, or inform how they prioritize resource allocations.

To this end, the OMB will be working with agencies on a threat-based budget model, looking at which threats can realistically affect the agency, what is in place to prevent them and what specifically needs to be improved.

2. "Agencies do not have standardized cybersecurity processes and IT capabilities."

There's immense variety in the tasks and capabilities of our many federal agencies, but you would think that some basics would have been established along the lines of best practices for reporting, standard security measures to lock down secure systems and so on. Nope!

For example, one agency lists no fewer than 62 separately managed email services in its environment, making it virtually impossible to track and inspect inbound and outbound communications across the agency.

51 percent of agencies can't detect or whitelist software running on their systems.

When something happens, things are little better: 59 percent of agencies have some kind of standard process for communicating cyber threats to their users. So, for example, if one of their 62 email systems has been compromised, the agency as likely as not has no good way to notify everyone about it.

And only 30 percent have "predictable, enterprise-wide incident response processes in place," meaning once the threat has been detected, only about one in three has some kind of standard procedure for who to tell and what to tell them.

Establishing standard processes for cybersecurity and general harmony in computing resources is something the OMB has been working on for a long time. Too bad the position of cyber coordinator just got eliminated.

3. "Agencies lack visibility into what is occurring on their networks, and especially lack the ability to detect data exfiltration."

Monitoring your organization's data and traffic, both internal and external, is a critical part of any cybersecurity plan. Time and again federal agencies have proven susceptible to all kinds of exfiltration schemes, from USB keys to phishing for login details.

73 percent can't detect attempts to access large volumes of data.

Simply put, agencies cannot detect when large amounts of information leave their networks, which is particularly alarming in the wake of some of the high-profile incidents across government and industry in recent years.

Hard to secure your data if you can't see where it's going. After the "high-profile incidents" to which the OMB report alludes, one would think that detection and lockdown of data repositories would be one of the first efforts these agencies would make.

Perhaps it's the total lack of insight into how and why these things occur. Only 17 percent of agencies analyzed incident response data after the fact, so maybe they just filed the incidents away, never to be looked at again.

The OMB has a smart way to start addressing this: one agency that has its act together will be designated a "SOC [Security Operations Center] Center of Excellence." (Yes, "Center" is there twice.) This SOC will offer secure storage and access as a service to other agencies while the latter improve or establish their own facilities.

4. "Agencies lack standardized and enterprise-wide processes for managing cybersecurity risks"

There's a bit of overlap with 2 here, but redundancy is the name of the game when it comes to the U.S. government. This one is a bit more focused on the leadership itself.

While most agencies noted… that their leadership was actively engaged in cybersecurity risk management, many did not, or could not, elaborate in detail on leadership engagement above the CIO level.

Federal agencies possess neither robust risk management programs nor consistent methods for notifying leadership of cybersecurity risks across the agency.

84 percent of agencies failed to meet goals for encrypting data at rest.

Despite "repeated calls from industry leaders, GAO [the Government Accountability Office], and privacy advocates" to utilize encryption wherever possible, less than 16 percent of agencies achieved their targets for encrypting data at rest. Sixteen percent! Encrypting at rest isn't even that hard!

Turns out this is an example of under-investment by the powers that be. Non-defense agencies budgeted a total between them of under $51 million on encrypting data in FY 2017, which is extremely little even before you consider that half of that came from two agencies. How are even motivated IT departments supposed to migrate to encrypted storage when they have no money to hire the experts or get the equipment necessary to do so?

"Agencies have demonstrated that this is a low priority…it is easy to see government's priorities must be realigned," the OMB remarked.

While the conclusion of the report isn't as gloomy as the body, it's clear that the OMB's researchers are deeply disappointed by what they found. This is hardly a new issue, despite the current president's designation of it as a key issue (previous presidents did the same), but movement has been slow and halting, punctuated by disastrous breaches and embarrassing leaks.

The report declines to name and shame the offending agencies, perhaps because their failings and successes were diverse and no one deserved worse treatment than another, but it seems highly likely that in less public channels those agencies are not being spared. Hopefully this damning report will put spurs to the efforts that have been limping along for the last decade.

Read more: Government investigation finds federal agencies failing at cybersecurity basics

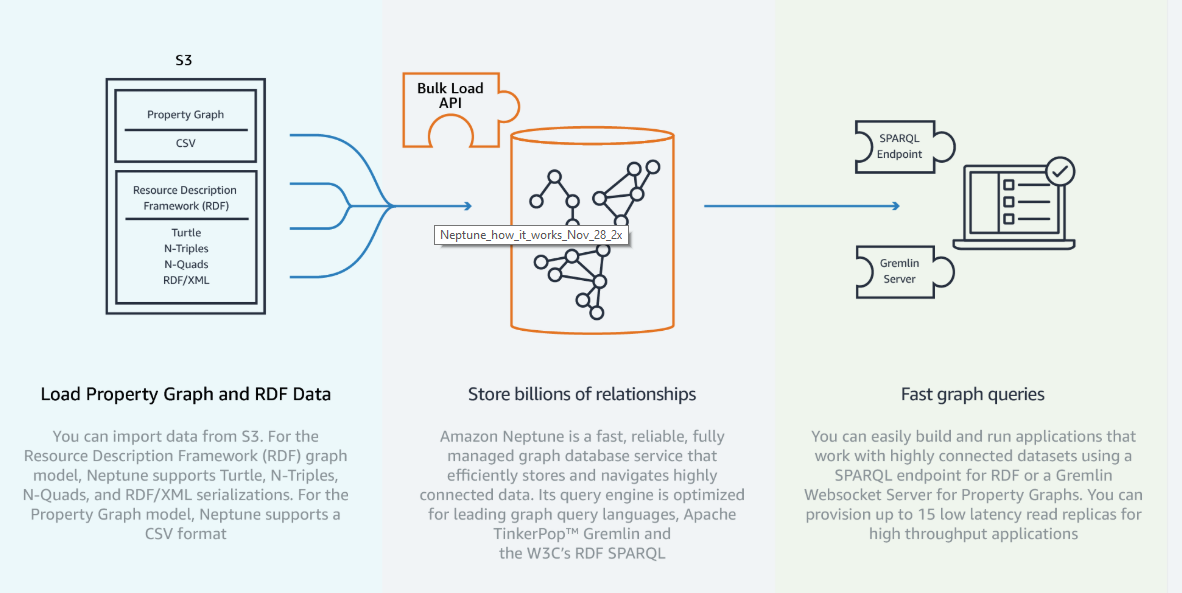

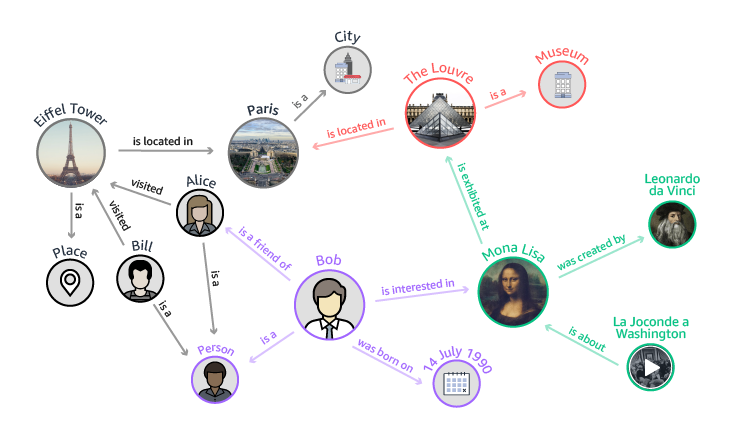

AWS today announced that its Neptune graph database, which made its debut during the platform's annual re:Invent conference last November, is now generally available. The launch of Neptune was one of the dozens of announcements the company made during its annual developer event, so you can be forgiven if you missed it.

Neptune supports graph APIs for both TinkerPop Gremlin and SPARQL, making it compatible with a variety of applications. AWS notes that it built the service to recover from failures within 30 seconds and promises 99.99 percent availability.
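To put the 99.99 percent figure in perspective, here is a rough sketch of how much downtime that promise would still allow. It assumes a 365-day year and that availability is measured over the whole year; neither assumption comes from AWS's announcement, and the actual measurement window may differ.

```python
# Availability figure quoted in the article.
availability_percent = 99.99

# Assumption for illustration: a 365-day year, measured as a whole.
minutes_per_year = 365 * 24 * 60

# Fraction of time the service may be unavailable under that promise.
downtime_fraction = (100 - availability_percent) / 100
allowed_downtime_minutes = round(downtime_fraction * minutes_per_year, 2)

print(f"Allowed downtime: about {allowed_downtime_minutes} minutes per year")
```

Under those assumptions, the promise leaves room for a little under an hour of downtime per year.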

"As the world has become more connected, applications that navigate large, connected data sets are increasingly more critical for customers," said Raju Gulabani, vice president, Databases, Analytics, and Machine Learning at AWS. "We are delighted to give customers a high-performance graph database service that enables developers to query billions of relationships in milliseconds using standard APIs, making it easy to build and run applications that work with highly connected data sets."

Standard use cases for Neptune are social networking applications, recommendation engines, fraud detection tools and networking applications that need to map the complex topology of an enterprise's infrastructure.

Neptune already has a couple of high-profile users, including Samsung, AstraZeneca, Intuit, Siemens, Pearson, Thomson Reuters and Amazon's own Alexa team. "Amazon Neptune is a key part of the toolkit we use to continually expand Alexa's knowledge graph for our tens of millions of Alexa customers. It's just Day 1 and we're excited to continue our work with the AWS team to deliver even better experiences for our customers," said David Hardcastle, director of Amazon Alexa, in today's announcement.

The service is now available in AWS's U.S. East (N. Virginia), U.S. East (Ohio), U.S. West (Oregon) and EU (Ireland) regions, with others coming online in the future.

Read more: AWS’s Neptune graph database is now generally available

Apple announced today that it's placed a straight-to-series order for Dickinson, a show that will star Hailee Steinfeld as poet Emily Dickinson.

Steinfeld is an actress and singer who was Oscar-nominated for her performance in True Grit and more recently performed in Pitch Perfect 2 and 3. Dickinson, meanwhile, is generally considered one of the great American poets, but given her reputation as an eccentric recluse, her life doesn't seem to be the stuff of great drama (a recent biopic was called A Quiet Passion).

It sounds like this won't be a standard biography, however: Dickinson is being billed as a coming-of-age story with a modern sensibility and tone.

The series will be written and executive produced by Alena Smith, who previously wrote for The Affair and The Newsroom. And it will be directed and executive produced by David Gordon Green, best known for directing comedies like Pineapple Express and episodes of HBO's Vice Principals (though he's also directed non-comedic films like the recent biopic Stronger).

Dickinson joins a varied list of original series in development at Apple, ranging from a reboot of Steven Spielberg's Amazing Stories to a Reese Witherspoon- and Jennifer Aniston-starring series set in the world of morning TV and an adaptation of Isaac Asimov's Foundation books. Apple reportedly plans to launch the first shows in this new lineup (presumably as part of a new subscription service) next March.

Read more: Apple is making a series about Emily Dickinson

Nvidia launched a monster box yesterday called the HGX-2, and it's the stuff that geek dreams are made of. It's a cloud server that is purported to be so powerful it combines high-performance computing with artificial intelligence requirements in one exceptionally compelling package.

You know you want to know the specs, so let's get to it: It starts with 16x NVIDIA Tesla V100 GPUs. That's good for 2 petaFLOPS for AI with low precision, 250 teraFLOPS for medium precision and 125 teraFLOPS for those times when you need the highest precision. It comes standard with half a terabyte of memory and 12 Nvidia NVSwitches, which enable GPU-to-GPU communications at 300 GB per second. Nvidia has doubled the capacity from the HGX-1 released last year.
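For a sense of scale, the aggregate figures above can be divided across the 16 GPUs. The following is just a back-of-the-envelope sketch: the dictionary labels are illustrative, and treating "half a terabyte" as 512 GB is an assumption, not a number from Nvidia.

```python
# Aggregate HGX-2 figures as quoted in the article, in teraFLOPS.
gpu_count = 16
aggregate_teraflops = {
    "low precision (AI)": 2000.0,  # 2 petaFLOPS
    "medium precision": 250.0,
    "highest precision": 125.0,
}

# Assumption: "half a terabyte" is taken to mean 512 GB.
total_memory_gb = 512

# Even split of each aggregate figure across the GPUs.
per_gpu_teraflops = {
    label: total / gpu_count for label, total in aggregate_teraflops.items()
}
per_gpu_memory_gb = total_memory_gb / gpu_count

for label, value in per_gpu_teraflops.items():
    print(f"{label}: {value} teraFLOPS per GPU")
print(f"memory: {per_gpu_memory_gb} GB per GPU")
```

The even split is a simplification, but it shows how the headline numbers scale with the GPU count.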

Chart: Nvidia

Paresh Kharya, group product marketing manager for Nvidia's Tesla data center products, says this communication speed enables them to treat the GPUs essentially as one giant, single GPU. "And what that allows [developers] to do is not just access that massive compute power, but also access that half a terabyte of GPU memory as a single memory block in their programs," he explained.

Graphic: Nvidia

Unfortunately you won't be able to buy one of these boxes. In fact, Nvidia is distributing them strictly to resellers, who will likely package these babies up and sell them to hyperscale data centers and cloud providers. The beauty of this approach for cloud resellers is that when they buy it, they have the entire range of precision in a single box, Kharya said.

"The benefit of the unified platform is as companies and cloud providers are building out their infrastructure, they can standardize on a single unified architecture that supports the entire range of high-performance workloads. So whether it's AI, or whether it's high-performance simulations, the entire range of workloads is now possible in just a single platform," Kharya explained.

He points out this is particularly important in large-scale data centers. "In hyperscale companies or cloud providers, the main benefit that they're providing is the economies of scale. If they can standardize on the fewest possible architectures, they can really maximize the operational efficiency. And what HGX allows them to do is to standardize on that single unified platform," he added.

As for developers, they can write programs that take advantage of the underlying technologies and program in the exact level of precision they require from a single box.

The HGX-2 powered servers will be available later this year from partner resellers, including Lenovo, QCT, Supermicro and Wiwynn.

Read more: Nvidia launches colossal HGX-2 cloud server to power HPC and AI
