
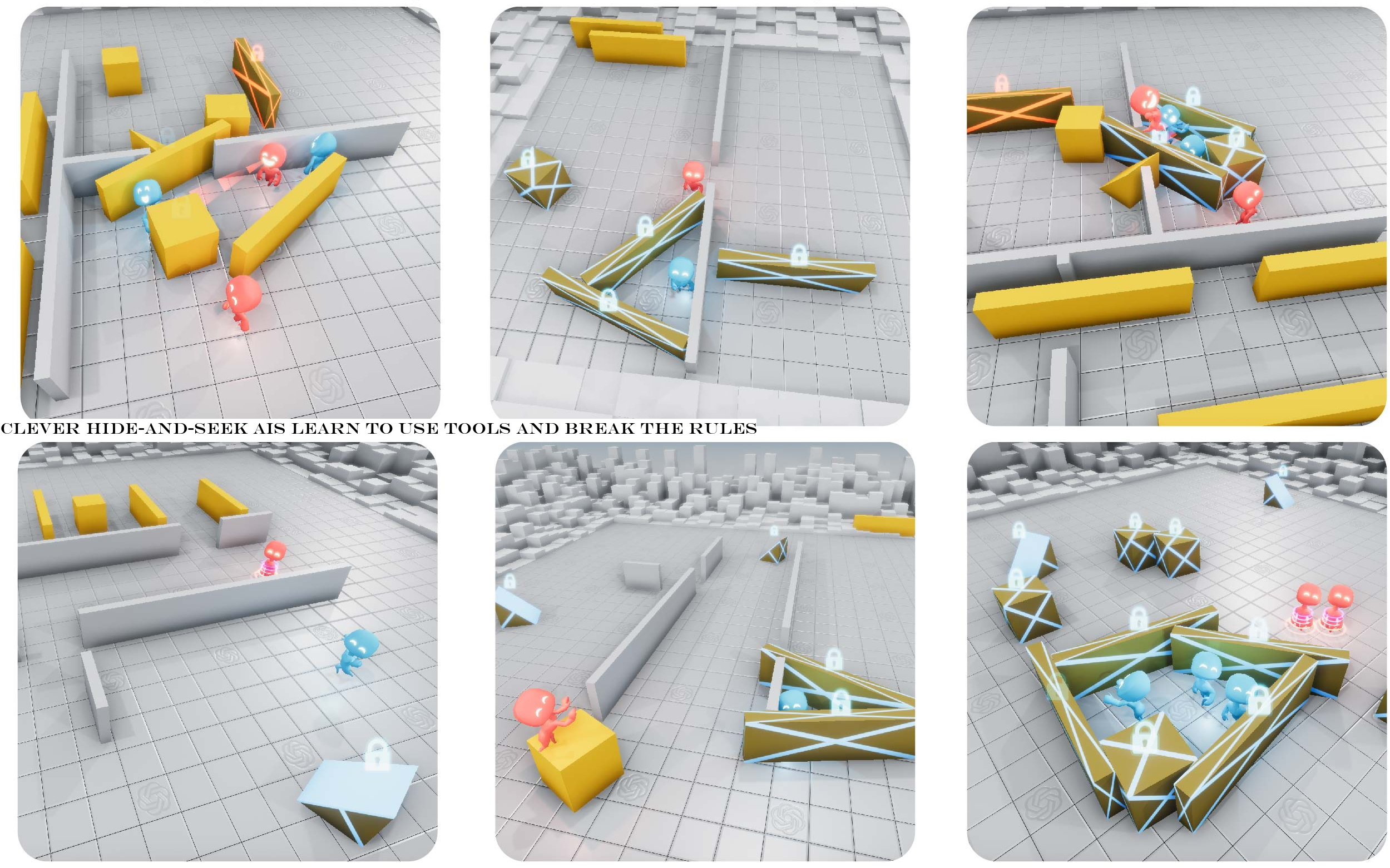

The latest research from OpenAI put its machine learning agents in a simple game of hide-and-seek, where they pursued an arms race of ingenuity, using objects in unexpected ways to achieve their goal of seeing or avoiding being seen. This type of self-taught AI could prove useful in the real world as well.

The study set out to examine, and successfully demonstrated, that machine learning agents can learn sophisticated, real-world-relevant techniques without any interference or suggestions from the researchers.

Tasks like identifying objects in photos or inventing plausible human faces are difficult and useful, but they don't really reflect actions one might take in the real world. They're highly intellectual, you might say, and as a consequence can be brought to a high level of effectiveness without ever leaving the computer.

By contrast, training an AI to use a robotic arm to grip a cup and put it on a saucer is far more difficult than one might imagine (and has only been accomplished under very specific circumstances); the complexity of the real, physical world makes purely intellectual, computer-bound learning of such tasks pretty much impossible.

At the same time, there are in-between tasks that do not necessarily reflect the real world completely, but can still be relevant to it. A simple one might be how to change a robot's facing when presented with multiple relevant objects or people. You don't need a thousand physical trials to know it should rotate itself or the camera so it can see both, or switch between them, or whatever.

OpenAI's hide-and-seek challenge to its baby ML agents was along these lines: a game environment with simple rules (called Polyworld) that nevertheless uses real-world-adjacent physics and inputs. If the AIs can teach themselves to navigate this simplified reality, perhaps they can transfer those skills, with some modification, to full-blown reality.

Such is the thinking behind the experiment, anyway, but it's entertaining enough on its own. The game pits two teams against one another in a small 3D arena populated with a few randomly generated walls and objects. Several agents are spawned in it and the "hiders" are given a few seconds to familiarize themselves with the environment and hide. They can also interact with the objects, pushing them around and locking them in place. Once the "seekers" look up, they have a certain amount of time to spot the hiders.

All the machine learning program was told about were the basic senses (each agent has a view of the world and a sort of "lidar" that reports the positions of nearby objects) and the fact that agents can move objects around. Beyond that, they were simply given a point when they succeeded at their job, either seeking or hiding; that's their motivation.
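The scoring just described can be sketched as a team-level reward function. This is a minimal illustration, not OpenAI's implementation: the function name, the +1/-1 values and the zero-sum, shared-by-team structure are assumptions made for the example.

```python
def team_rewards(hider_seen_flags):
    """Return (hider_reward, seeker_reward) for one time step.

    hider_seen_flags: one boolean per hider, True if a seeker can see it.
    Assumption (not from the article): the reward is shared by the whole
    team and is zero-sum, so one team's point is the other's penalty.
    """
    any_seen = any(hider_seen_flags)
    # Hiders succeed only while every hider stays out of sight.
    hider_reward = -1 if any_seen else 1
    return hider_reward, -hider_reward


# Both hiders concealed: hiders score, seekers are penalized.
print(team_rewards([False, False]))
# One hider spotted: the outcome flips.
print(team_rewards([True, False]))
```

Nothing in such a signal mentions forts, ramps or boxes, so any tool use has to emerge from the competition itself.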

From these basic beginnings came wonderfully interesting results. At first the teams essentially moved randomly. But over millions of games the hiders found that by moving in a certain way ("crudely" running away) they could increase their points more often. And thus the arms race began.

Since the hiders were running away, the seekers began to develop more structured pursuit behaviors. But then came the tools.

Hiders found that they could push and pull objects on the field, and began constructing forts out of them.

The seekers responded by using ramps found on the field to ascend over the objects and crash the forts.

Hiders began looking for ramps while the seekers were still counting and either stealing them or locking them in place (the lock can't be undone by an agent of the opposite team). Interestingly, this involved a certain amount of division of labor, because there often wasn't enough time for both hiders to go out in search of ramps; one had to prepare the fort while the other went on ramp patrol.

The OpenAI team thought maybe that was the end of it, but the seekers learned to exploit an unintended feature of the game world. They discovered that they could "push" a box while riding on top of it, meaning they could put it near a ramp, climb onto it, then "surf" it all over the field and find those naughty hiders.

Of course the hiders responded by locking every object they weren't using to construct their fort, and that seems to be the end of the line as far as strategy in this game.

So what's the point? As the authors of the paper explain, this is kind of the way we came about.

The vast amount of complexity and diversity on Earth evolved due to co-evolution and competition between organisms, directed by natural selection. When a new successful strategy or mutation emerges, it changes the implicit task distribution neighboring agents need to solve and creates a new pressure for adaptation. These evolutionary arms races create implicit autocurricula whereby competing agents continually create new tasks for each other.

Inducing autocurricula in physically grounded and open-ended environments could eventually enable agents to acquire an unbounded number of human-relevant skills.

In other words, having AI models compete in an unsupervised manner may be a far better way to develop useful and robust skills than letting them toddle around on their own, racking up an abstract number like percentage of environment explored or the like.

Increasingly it is difficult or even impossible for humans to direct every aspect of an AI's abilities by parameterizing it and controlling the interactions it has with the environment. For complex tasks like a robot navigating a crowded environment, there are so many factors that having humans design behaviors may never produce the kind of sophistication that's necessary for these agents to take their place in everyday life.

But they can teach each other, as we've seen here and in GANs, where a pair of dueling AIs work to defeat each other in the creation or detection of realistic media. The OpenAI researchers posit that "multi-agent autocurricula," or self-teaching agents, are the way forward in many circumstances where other methods are too slow or structured. They conclude:

"These results inspire confidence that in a more open-ended and diverse environment, multi-agent dynamics could lead to extremely complex and human-relevant behavior."

Some parts of the research have been released as open source. You can read the full paper describing the experiment here.


- Category: Technology

Read more: Clever hide-and-seek AIs learn to use tools and break the rules

Google today announced an update to how it handles videos in search results. Instead of just listing relevant videos on the search results page, Google will now also highlight the most relevant parts of longer videos, based on timestamps provided by the video creators. That's especially useful for how-to videos or documentaries.

"Videos aren't skimmable like text, meaning it can be easy to overlook video content altogether," Google Search product manager Prashant Baheti writes in today's announcement. "Now, just like we've worked to make other types of information more easily accessible, we're developing new ways to understand and organize video content in Search to make it more useful for you."

In the search results, you will then see direct links to the different parts of a video, and a click on one of them will take you right to that part of the video.

To make this work, content creators first have to mark up their videos with bookmarks for the specific segments they want to highlight, no matter what platform they are on. Indeed, it's worth stressing that this isn't just a feature for YouTube creators. Google says it's already working with video publishers like CBS Sports and NDTV, which will soon start marking up their videos.

I'm somewhat surprised that Google isn't using its machine learning wizardry to mark up videos automatically. For now, the burden is on the video creator, and, given how much work simply creating a good video is, it remains to be seen how many of them will do so. On the other hand, it'll give them a chance to highlight their work more prominently on Google Search, though Google doesn't say whether the markup will have any influence on a video's ranking on its search results pages.


Read more: Google starts highlighting key moments from videos in Search


Bharat Vasan is no longer the Chief Executive Officer at Pax Labs, the consumer tech company that makes cannabis vaporizers. A source familiar with the situation said that the board of directors made the decision to remove Vasan from the CEO role. His last day was Friday.

We've reached out to Vasan for comment. Pax is declining to elaborate on what drove its decision.

Certainly, it's a surprising move, given that Vasan was appointed the CEO of Pax not so long ago, in February of 2018. Before that, he served as President and COO of August Home, which was acquired by Swedish lock maker Assa Abloy in 2017. Previous to that, Vasan was the cofounder of Basis, a fitness-based wearable company that was acquired by Intel in 2014 for $100 million.

Vasan also led the company in its most recent round this past April, in which it secured $420 million from Tiger Global Management, Tao Capital, and Prescott General Partners, among others. The post-money valuation for the company at the time was $1.7 billion.

Vasan is a veteran of consumer electronics, but Pax may be looking for a CEO that has more operational experience in cannabis.

After all, Pax is at an interesting intersection in its path, navigating an oft-changing regulatory landscape around cannabis. Moreover, the entire cannabis industry — and vaporizer industry — is under a microscope in the wake of hundreds of reports of vape-related lung illness. The CDC says that there have been 380 cases of lung illness reported across 36 states, with six deaths. Most patients reported a history of using e-cigarette products containing THC.

Pax is currently on the hunt for a new chief executive. In the meantime, its general counsel, Lisa Sergi, who joined the company at the end of July, will be its interim CEO and president.

Sergi had this to say in a prepared statement:

PAX is uniquely positioned as a leader in the burgeoning cannabis industry, with a talented team, an iconic brand, quality products and the balance sheet to achieve our ambitious goals and continued growth trajectory. I am extremely excited and honored to have been entrusted to lead this extraordinary company.


Read more: Pax Labs’ Bharat Vasan is out as CEO

When it opened in 2006, Apple's Fifth Avenue flagship quickly became a top destination for New York City residents and tourists alike. The big glass cube was a radical departure from prior electronics stores, serving as the entrance to a 24-hour subterranean retail location. The location didn't hurt either, with the company planting its flag across from the Plaza Hotel and Central Park and sharing a block with the iconic high-end toy store FAO Schwarz.

Since early 2017, however, the store has been closed for renovations. Earlier this month, the company took the wraps off the outside of the cube (albeit with some multi-color reflective wrap still occupying the outside of the familiar retail landmark). Last week, the company offered more insight into the plan as retail SVP Deirdre O'Brien took to the stage during the iPhone 11 event to discuss the company's plans for the reinvented space.

During a discussion with TechCrunch, Apple shed even more light on the underground store, which will occupy the full area of the Fifth Avenue plaza. As is the case with all of Apple's flagships, light is the thing here, though that's easier said than done when dealing with an underground space. Illuminating the store is done through a combination of natural light and LEDs.

When the store reopens, a series of skylights flush with the ground of the plaza will be doing much of the heavy lifting for the lighting during the day. Each of those round portholes will be frosted to let the light in while protecting the privacy of people walking above, with supplemental lighting from silver LED rings. That, in turn, is augmented by 18 (nine on each side of the cube) "sky lenses." Oriented in two 3×3 configurations, the "sculptural furniture" will also provide seating in the outdoor plaza.

Of course, the natural lighting isn't able to do all of the work for a 24-hour store. That's complemented by a ceiling that uses a stretched fabric-based lighting system similar to the one in other Apple Stores. Here, however, the fabric will take on a more cloud-like structure with a more complicated geometrical shape than in other Apple Stores. The fabric houses tunable LED lights that react to the external environment. If it's sunny outside, it will be brighter downstairs. When it's cloudy, the lights will dim.

In all, there are five modes tuned to a 24-hour cycle, including:

- Sunrise: 3,000K

- Day: 4,500K-5,250K (depending on how bright it is outside)

- Sunset: 3,000K

- Evening: 3,250K

- Night: 3,500K
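The five modes listed above can be read as a small lookup table. The sketch below is illustrative only: the function name, the 0-to-1 brightness scale and the linear mapping across the Day range are assumptions, since the article gives the Day range but not how the system moves within it.

```python
# Color temperature range in kelvin for each mode, taken from the list above.
MODES_KELVIN = {
    "sunrise": (3000, 3000),
    "day": (4500, 5250),
    "sunset": (3000, 3000),
    "evening": (3250, 3250),
    "night": (3500, 3500),
}


def color_temperature(mode, outside_brightness=0.0):
    """Return a target color temperature in kelvin for the given mode.

    outside_brightness is a hypothetical 0.0-1.0 reading; only the Day
    mode has a range, so it is the only mode the reading affects.
    Assumption: the value scales linearly between the range endpoints.
    """
    low, high = MODES_KELVIN[mode]
    level = min(max(outside_brightness, 0.0), 1.0)  # clamp to [0, 1]
    return low + (high - low) * level


print(color_temperature("evening"))
print(color_temperature("day", 0.5))
```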

Sunrise and sunset are apparently the best time to check it out, as the lights glow warmly for about an hour or so. There are 80 ring lights in all, and around 500,000 LEDs, with about 2,500 LED spotlights used to illuminate tables and products inside the store. The natural lighting also will be used to keep alive eight trees and a green wall in the underground space.

The newly remodeled store opens at 8AM on September 20, just in time to line up for the new iPhone.


Read more: Natural lighting is the key to Apple’s remodeled Fifth Ave. store


In today's brand landscape, consumers are rejecting traditional advertising in favor of transparent, personalized and, most importantly, authentic communications. In fact, 86% of consumers say that authenticity is important when deciding which brands they support. Driven by this growing emphasis on brand sincerity, marketers are increasingly leveraging user-generated content (UGC) in their marketing and e-commerce strategies.

Alongside the rise in the use of UGC has come an increase in privacy-focused regulation, such as the European Union's industry-defining General Data Protection Regulation (GDPR), along with others that will go into effect in the coming years, like the California Consumer Privacy Act (CCPA) and several other state-specific laws. Quite naturally, brands are asking themselves two questions:

- Is it worth the effort to incorporate UGC into our marketing strategy?

- And if so, how do we do it within the rules, and more importantly, in adherence with the expectations of consumers?

Consumers seek to be active participants in their favorite companies' brand identity journey, rather than passive recipients of brand-created messages. Consumers trust images by other consumers on social media seven times more than advertising.

Additionally, 56% are more likely to buy a product after seeing it featured in a positive or relatable user-generated image. The research and results clearly show that the average consumer perceives content from a peer to be more trustworthy than brand-driven content.

With that in mind, we must help brands leverage UGC with approaches that comply with privacy regulations while also engaging customers in an authentic way.

Influencer vs user: Navigating privacy considerations in an online world


Read more: In a social media world, here’s what you need to know about UGC and privacy


Here's a fun thing to look forward to next month. Simone Giertz, she of the shitty robots fame, will be appearing onstage at Disrupt SF (October 2-4) at the Moscone Center in San Francisco.

The U.S.-based Swedish inventor has built a massive online following (currently at 1.92 million YouTube subscribers) with DIY videos that examine technology and art through a whimsical lens.

Giertz is probably best known for her "shitty" robotic creations, including arms that serve soup and breakfast, draw holiday cards and apply lipstick, all to hilariously uneven results. More recently, she had a verified viral hit when she busted out some power tools to turn her Tesla into a pickup truck.

She'll be joining us onstage to walk us through some of her most interesting creations, including The Every Day calendar. The project, which made nearly $600,000 on Kickstarter late last year, is designed to help motivate users into developing good habits, like meditating, flossing or writing. Or, you know, eating churros.

Disrupt SF runs October 2 to October 4 at the Moscone Center in San Francisco. Giertz joins an outstanding lineup of speakers, including Kitty Hawk's Sebastian Thrun, Admiral Mike Rodgers, Rachel Haurwitz of Caribou Biosciences, Marc Benioff, Box's Aaron Levie and dozens more.

Buy tickets here!


Read more: Roboticist and YouTube star Simone Giertz is coming to Disrupt SF (Oct. 2-4)
